Spotting AI Bots: Real-time User Agent Analysis with Python & SQLite

Setting the Stage: Context for the Curious Book Reader

This essay delves into an important methodology for understanding the digital frontier in the Age of AI: discerning automated machine traffic from human interaction on web servers. As AI systems become more prevalent, their digital footprints often mingle with those of human users, creating a deceptive blur in analytics. Here, we lay out a practical way to refine raw log data into actionable intelligence, ensuring that you can clearly see the emerging landscape of AI agents interacting with your content and services. This hands-on blueprint demonstrates how to cut through the noise, making the invisible visible, and providing a crucial edge in monitoring the evolving web.

Technical Journal Entry Begins

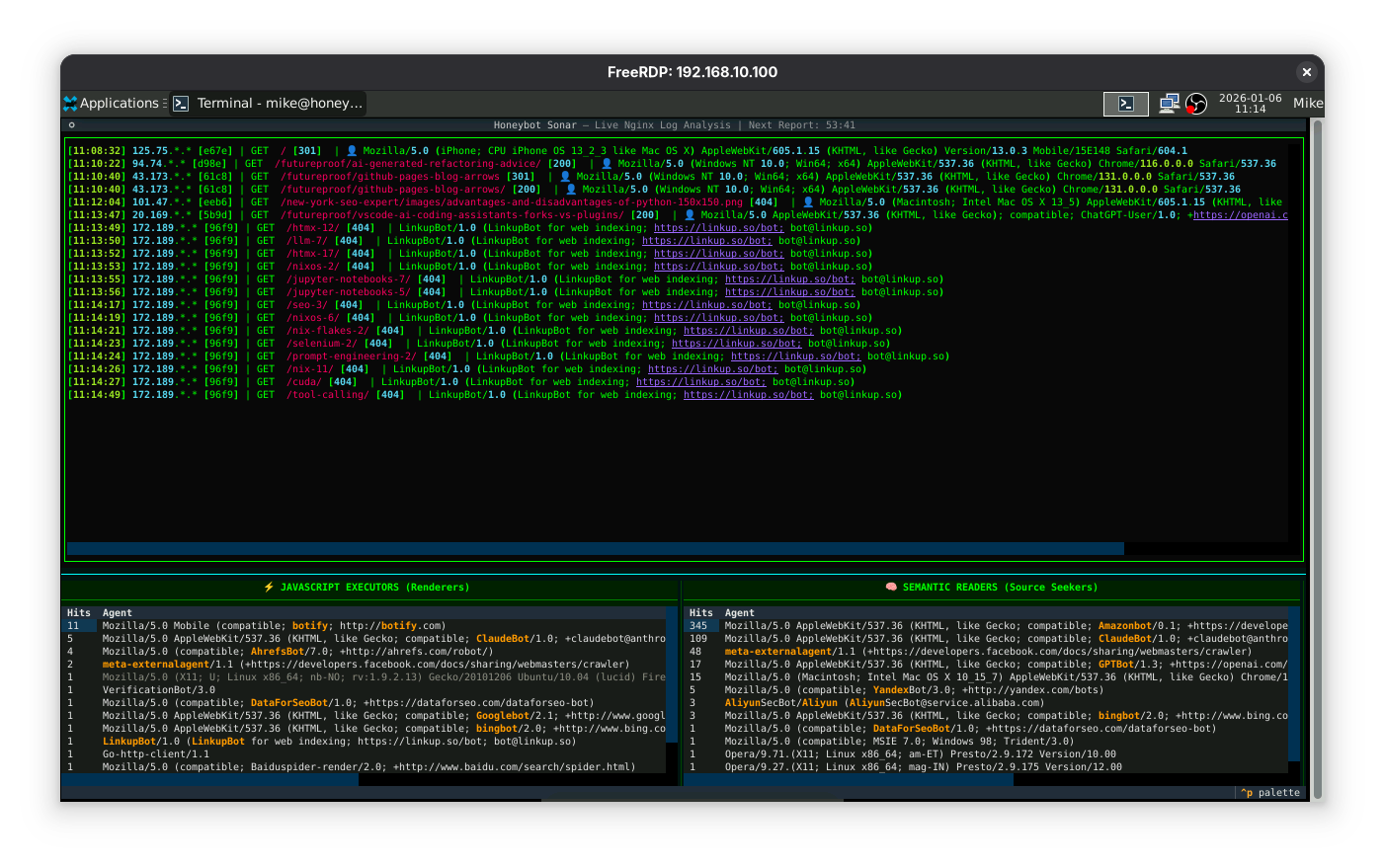

Alright, we rolled past 10:00 AM on a Tuesday morning with the client call at 3:00 PM and we will switch modes by 11:00 AM from the couple of queued-up sub-projects that are each of the chisel-strike non-rabbithole variety that change my developer life in a positive way forever forward. Actually, the first is a bit of eye-candy to make a point concerning my recent discoveries concerning crawlers and bots visiting your sites, AI-bots in particular. Let’s see how light-touch I can make this and quickly I can slam out this big win. I characterized it as eye-candy but it’s really much more.

The Immediate Goal: Spotting AI-Bot Traffic

I’ll recycle the last Gemini 3 Pro discussion thread I’ve been using so that it

will have all the context leading up to where we are. But I simply want to

color-code the occurrences of bot names such as ClaudeBot, bingbot and the rest

from a known list, knowable probably even from the discussion context so far

with me not having to spell them out. However, what I do need to do is to show

Gemini the reporting system and YouTube streaming system that’s showing the

current logs.py report with color coding very similar to what I’m looking for,

but on weblog fields. This time I want to simply color highlight the known

bot-names within the UserAgent strings that we’re showing in the 2 lower panel

reports labeled “JAVASCRIPT EXECUTERS” AND “SEMANTIC READERS”.

User Agent Logs: A Glimpse into Who’s Visiting

See, it is simple as that. When you see something like:

┌─────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────┐

│ ⚡ JAVASCRIPT EXECUTORS (MathJax Resource Fetch) │

│ Hits Agent │

│ 193 Mozilla/5.0 (X11; Linux x86_64; rv:146.0) Gecko/20100101 Firefox/146.0 │

│ 11 Mozilla/5.0 Mobile (compatible; botify; http://botify.com) │

│ 10 Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/139.0.0.0 Safari/537.36 │

│ 9 Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/142.0.0.0 Safari/537.36 │

│ 5 Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; ClaudeBot/1.0; +claudebot@anthropic.com) │

│ 4 Mozilla/5.0 (compatible; AhrefsBot/7.0; +http://ahrefs.com/robot/) │

│ 2 Mozilla/5.0 (iPhone; CPU iPhone OS 18_6_2 like Mac OS X) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/18.6 Mobile/15E148 Safari/604.1 │

│ 2 Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/143.0.0.0 Safari/537.36 │

│ 2 Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/139.0.0.0 Safari/537.36 │

│ 2 Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/111.0.0.0 Safari/537.36 │

│ 2 meta-externalagent/1.1 (+https://developers.facebook.com/docs/sharing/webmasters/crawler) │

│ 1 Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/131.0.6778.33 Safari/537.36 │

│ 1 Mozilla/5.0 (X11; U; Linux x86_64; nb-NO; rv:1.9.2.13) Gecko/20101206 Ubuntu/10.04 (lucid) Firefox/3.6.13 │

│ 1 Mozilla/5.0 (Linux; Android 11; V2055A) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.101 Mobile Safari/537.36 │

│ 1 VerificationBot/3.0 │

│ 1 Mozilla/5.0 (compatible; DataForSeoBot/1.0; +https://dataforseo.com/dataforseo-bot) │

│ 1 Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; Googlebot/2.1; +http://www.google.com/bot.html) Chrome/141.0.7390.122 Safa... │

│ 1 Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/143.0.0.0 Safari/537.36 │

│ 1 Mozilla/5.0 (X11; Linux x86_64; rv:145.0) Gecko/20100101 Firefox/145.0 │

│ 1 Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36 │

└─────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────┘

┌─────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────┐

│ 🧠 SEMANTIC READERS (Source Markdown Fetch) │

│ Hits Agent │

│ 345 Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; Amazonbot/0.1; +https://developer.amazon.com/support/amazonbot) Chrome/119... │

│ 109 Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; ClaudeBot/1.0; +claudebot@anthropic.com) │

│ 48 meta-externalagent/1.1 (+https://developers.facebook.com/docs/sharing/webmasters/crawler) │

│ 40 Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/135.0.0.0 Safari/537.36 │

│ 17 Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; GPTBot/1.3; +https://openai.com/gptbot) │

│ 14 Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/131.0.0.0 Safari/537.36; compatible; OAI-Se... │

│ 6 Mozilla/5.0 (iPhone; CPU iPhone OS 13_2_3 like Mac OS X) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/13.0.3 Mobile/15E148 Safari/604.1 │

│ 5 Mozilla/5.0 (compatible; YandexBot/3.0; +http://yandex.com/bots) │

│ 3 AliyunSecBot/Aliyun (AliyunSecBot@service.alibaba.com) │

│ 3 Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm) Chrome/116.0.1938.76 Safari... │

│ 1 Mozilla/5.0 (compatible; DataForSeoBot/1.0; +https://dataforseo.com/dataforseo-bot) │

│ 1 Mozilla/5.0 (compatible; MSIE 7.0; Windows 98; Trident/3.0) │

│ 1 Mozilla/5.0 (Macintosh; Intel Mac OS X 12_5) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36 Edg/114.0.1264.71 │

│ 1 Mozilla/5.0 (Windows; U; Windows NT 5.0) AppleWebKit/531.35.5 (KHTML, like Gecko) Version/4.0.5 Safari/531.35.5 │

│ 1 Mozilla/5.0 (iPhone; CPU iPhone OS 17_4 like Mac OS X) AppleWebKit/535.1 (KHTML, like Gecko) CriOS/44.0.867.0 Mobile/10E039 Safari/535.1 │

│ 1 Opera/9.71.(X11; Linux x86_64; am-ET) Presto/2.9.172 Version/10.00 │

│ 1 Opera/9.27.(X11; Linux x86_64; mag-IN) Presto/2.9.175 Version/12.00 │

│ 1 Mozilla/5.0 (compatible; MSIE 7.0; Windows NT 6.1; Trident/5.0) │

│ 1 Mozilla/5.0 (iPhone; CPU iPhone OS 1_1_5 like Mac OS X) AppleWebKit/533.0 (KHTML, like Gecko) CriOS/36.0.843.0 Mobile/13O940 Safari/533.0 │

│ 1 Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36 OPR/89.0.4447.51 │

└─────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────┘

…what you do is you highlight words such as Amazonbot and anything else that’s consistent with what you know that I’m looking for here because you’re not stupid and can refer to things in your already existing training data or could even perform web searches to ferret up more possibilities or a good list. And then the only other stuff your really need at this point is the codebase itself that you’ll be providing the implementation instructions for.

So the whole process is pushed out to the webserver with:

deploy_honeybot.sh

…which is what lets me keep all the stuff about the DMZ honeybot nginx webhead

webserver actually in the pipulate/ repo even though the blog content is

coming from the trimnoir/ repo. The IaC stuff is mostly defined by Pipulate

with:

remotes/honeybot/nixos/configuration.nix

…which the deploy file puts in place along with everything from:

remotes/honeybot/scripts/

And so many things from that directory get put into the painting of the Prompt Fu context here, namely:

remotes/honeybot/scripts/content_loader.py

remotes/honeybot/scripts/stream.py

remotes/honeybot/scripts/db.py

remotes/honeybot/scripts/report.py

remotes/honeybot/scripts/logs.py

…which pretty much covers everything I think Gemini needs to see to help me with the implementation plan. And so all together, that’s:

Assembling the Codebase Context for AI

AI_PHOOEY_CHOP = """\

deploy_honeybot.sh

remotes/honeybot/nixos/configuration.nix

remotes/honeybot/scripts/content_loader.py

remotes/honeybot/scripts/stream.py

remotes/honeybot/scripts/db.py

remotes/honeybot/scripts/report.py

remotes/honeybot/scripts/logs.py

"""

And after such exposition including this article and the overflow in the context

window of Gemini from the prior articles and the complete codebase inclusion we

just affected, I hardly even need to articulate anymore of this request. Gemini

has all it needs to continue providing a detailed implementation plan for me to

get the color-coded useragents like I want on logs.py except maybe a please

and thank you!

Refining the Signal: Filtering Human Noise and Simplifying the Palette

Gemini 3 Pro: This is the perfect “Chisel Strike” project. It’s high-impact visual feedback with low-risk code changes. We are simply going to teach logs.py how to dress up the data it’s already fetching.

Phase One: “Technicolor Intelligence” via Color-Coded User Agents

Here is the implementation plan to bring “Technicolor Intelligence” to your dashboards.

The Plan

- Define the Palette: Create a mapping of bot substrings to Rich styles (colors).

- Create the Stylist: Add a helper function

stylize_agent(agent_string)that converts raw strings intorich.Textobjects with highlighting applied. - Apply the Makeup: Update

refresh_tablesto use this new function instead of printing raw strings.

The Code

You only need to edit remotes/honeybot/scripts/logs.py.

1. Add the Bot Configuration

Add this near the top of logs.py, right after the LOG_PATTERN definition. This is your “Registry of Known Entities.”

# ... existing imports ...

# --- Configuration ---

ANSI_ESCAPE = re.compile(r'(?:\x1B[@-_]|[\x80-\x9F])[0-?]*[ -/]*[@-~]')

LOG_PATTERN = re.compile(r'(?P<ip>[\d\.]+) - - \[(?P<time>.*?)\] "(?P<request>.*?)" (?P<status>\d+) (?P<bytes>\d+) "(?P<referrer>.*?)" "(?P<ua>.*?)"')

# NEW: The Bot Highlighting Palette

BOT_HIGHLIGHTS = {

# AI & LLMs

"ClaudeBot": "bold #d97757", # Anthropic Clay

"GPTBot": "bold #10a37f", # OpenAI Green

"OAI-SearchBot": "bold #10a37f", # OpenAI Green

"PerplexityBot": "bold #22b5bf", # Perplexity Teal

# Big Tech / Cloud

"Amazonbot": "bold #ff9900", # Amazon Orange

"Googlebot": "bold #4285f4", # Google Blue

"bingbot": "bold #008373", # Bing Teal

"meta-externalagent": "bold #0668e1", # Meta Blue

"Applebot": "bold #A2AAAD", # Apple Silver

"Aliyun": "bold #ff6a00", # Alibaba Orange

# Search & SEO

"Yandex": "bold #fc3f1d", # Yandex Red

"AhrefsBot": "bold #fd6917", # Ahrefs Orange

"DataForSeoBot": "bold magenta",

"SemrushBot": "bold orange1",

"DotBot": "bold green",

"LinkupBot": "bold cyan",

}

2. Add the Helper Function

Add this method inside the SonarApp class. I recommend placing it right before refresh_tables for logical grouping.

def stylize_agent(self, agent_str):

"""Converts a raw UA string into a Rich Text object with highlights."""

agent_str = agent_str.strip()

text = Text(agent_str)

# Default styling for common browsers to push them to background

if "Mozilla" in agent_str and "compatible" not in agent_str:

text.stylize("dim white")

# Highlight specific bots

for bot_name, style in BOT_HIGHLIGHTS.items():

if bot_name in agent_str:

# We use regex to find the specific substring and color it

# distinctively against the rest of the string

text.highlight_regex(re.escape(bot_name), style)

# Optional: If you want the WHOLE line to pop when a bot is found:

# text.stylize(style)

return text

3. Update refresh_tables

Modify your existing refresh_tables method to call the stylist.

def refresh_tables(self):

"""Updates both Intelligence tables from DB."""

if not db: return

# 1. Update JS Executors (Left)

try:

table = self.query_one("#js_table", DataTable)

table.clear()

# Fetch plenty, let UI clip

data = db.get_js_executors(limit=50)

if not data:

table.add_row("-", "Waiting for data...")

else:

for ua, count in data:

# CHANGED: Wrap in stylize_agent

table.add_row(str(count), self.stylize_agent(ua))

except: pass

# 2. Update Markdown Readers (Right)

try:

table = self.query_one("#md_table", DataTable)

table.clear()

# Fetch plenty, let UI clip

data = db.get_markdown_readers(limit=50)

if not data:

table.add_row("-", "Waiting for data...")

else:

for ua, count in data:

# CHANGED: Wrap in stylize_agent

table.add_row(str(count), self.stylize_agent(ua))

except: pass

The Result

When you deploy this (./deploy_honeybot.sh), the next time you look at the tables:

- Amazonbot will burn in Amazon Orange.

- ClaudeBot will stand out in Anthropic Clay.

- Googlebot (if it ever shows up in the Markdown column) will be Google Blue.

- Standard user traffic will fade into

dim white, effectively creating a visual filter that pops the signal (bots) from the noise (browsers).

This is a classic “dashboarding” win: increasing information density without adding clutter. Good luck on the 3:00 PM call!

Me: And that gives us this diff:

[mike@nixos:~/repos/pipulate/remotes/honeybot/scripts]$ git status

On branch main

Your branch is up to date with 'origin/main'.

nothing to commit, working tree clean

[mike@nixos:~/repos/pipulate/remotes/honeybot/scripts]$ vim logs.py

[mike@nixos:~/repos/pipulate/remotes/honeybot/scripts]$ git --no-pager diff

diff --git a/remotes/honeybot/scripts/logs.py b/remotes/honeybot/scripts/logs.py

index 4afdf157..4a562ebd 100644

--- a/remotes/honeybot/scripts/logs.py

+++ b/remotes/honeybot/scripts/logs.py

@@ -27,6 +27,37 @@ except ImportError:

ANSI_ESCAPE = re.compile(r'(?:\x1B[@-_]|[\x80-\x9F])[0-?]*[ -/]*[@-~]')

LOG_PATTERN = re.compile(r'(?P<ip>[\d\.]+) - - \[(?P<time>.*?)\] "(?P<request>.*?)" (?P<status>\d+) (?P<bytes>\d+) "(?P<referrer>.*?)" "(?P<ua>.*?)"')

+# ... existing imports ...

+

+# --- Configuration ---

+ANSI_ESCAPE = re.compile(r'(?:\x1B[@-_]|[\x80-\x9F])[0-?]*[ -/]*[@-~]')

+LOG_PATTERN = re.compile(r'(?P<ip>[\d\.]+) - - \[(?P<time>.*?)\] "(?P<request>.*?)" (?P<status>\d+) (?P<bytes>\d+) "(?P<referrer>.*?)" "(?P<ua>.*?)"')

+

+# NEW: The Bot Highlighting Palette

+BOT_HIGHLIGHTS = {

+ # AI & LLMs

+ "ClaudeBot": "bold #d97757", # Anthropic Clay

+ "GPTBot": "bold #10a37f", # OpenAI Green

+ "OAI-SearchBot": "bold #10a37f", # OpenAI Green

+ "PerplexityBot": "bold #22b5bf", # Perplexity Teal

+

+ # Big Tech / Cloud

+ "Amazonbot": "bold #ff9900", # Amazon Orange

+ "Googlebot": "bold #4285f4", # Google Blue

+ "bingbot": "bold #008373", # Bing Teal

+ "meta-externalagent": "bold #0668e1", # Meta Blue

+ "Applebot": "bold #A2AAAD", # Apple Silver

+ "Aliyun": "bold #ff6a00", # Alibaba Orange

+

+ # Search & SEO

+ "Yandex": "bold #fc3f1d", # Yandex Red

+ "AhrefsBot": "bold #fd6917", # Ahrefs Orange

+ "DataForSeoBot": "bold magenta",

+ "SemrushBot": "bold orange1",

+ "DotBot": "bold green",

+ "LinkupBot": "bold cyan",

+}

+

class SonarApp(App):

"""The Cybernetic HUD (Dual-Panel Edition)."""

@@ -131,6 +162,27 @@ class SonarApp(App):

self.set_interval(1, self.update_countdown)

self.set_interval(5, self.refresh_tables) # Refresh data every 5s

+ def stylize_agent(self, agent_str):

+ """Converts a raw UA string into a Rich Text object with highlights."""

+ agent_str = agent_str.strip()

+ text = Text(agent_str)

+

+ # Default styling for common browsers to push them to background

+ if "Mozilla" in agent_str and "compatible" not in agent_str:

+ text.stylize("dim white")

+

+ # Highlight specific bots

+ for bot_name, style in BOT_HIGHLIGHTS.items():

+ if bot_name in agent_str:

+ # We use regex to find the specific substring and color it

+ # distinctively against the rest of the string

+ text.highlight_regex(re.escape(bot_name), style)

+

+ # Optional: If you want the WHOLE line to pop when a bot is found:

+ # text.stylize(style)

+

+ return text

+

def refresh_tables(self):

"""Updates both Intelligence tables from DB."""

if not db: return

@@ -145,7 +197,8 @@ class SonarApp(App):

table.add_row("-", "Waiting for data...")

else:

for ua, count in data:

- table.add_row(str(count), ua.strip())

+ # CHANGED: Wrap in stylize_agent

+ table.add_row(str(count), self.stylize_agent(ua))

except: pass

# 2. Update Markdown Readers (Right)

@@ -158,7 +211,8 @@ class SonarApp(App):

table.add_row("-", "Waiting for data...")

else:

for ua, count in data:

- table.add_row(str(count), ua.strip())

+ # CHANGED: Wrap in stylize_agent

+ table.add_row(str(count), self.stylize_agent(ua))

except: pass

def update_countdown(self):

[mike@nixos:~/repos/pipulate/remotes/honeybot/scripts]$

And I deploy. And here we are spotting AI Bot UserAgents:

Wow, this went very well. The only things that occur to me now is that there’s too much ongoing friction in having to assign colors to useragents as we identify them. It’s fine for them all to be highlighted in the same color. I don’t want to have to play that mental game of this-is-this color and that-is-that color. Just choose the best color for all of them. Looking at it I think the bright orange you used for AmazonBot is best.

The other thing that I think is important at this time is to actually filter out things that are absolutely undeniably just mere web browsers in the hands of a human. We don’t have that many lines to display thing things of interest, the bots, so we certainly want to filter out lines like:

193 Mozilla/5.0 (X11; Linux x86_64; rv:146.0) Gecko/20100101 Firefox/146.0 │

10 Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/139.0.0.0 Safari/537.36 │

…from showing. Everything about those strings and the counts screams human-with-browser. However I appreciate there is a deny-all first versus an allow-all first issue here and I don’t want to over-filter. We should probably come up with some technique that favors letting things show so that all the usual suspects concentrate up near the top and things that poke through from the lower regions are candidates for consideration with the bot list. That way the friction is really the good stuff. We see new bot candidate. We either filter it from further display or add it to the list of those that become color-coded.

I am also aware that the new candidates may be appearing in lower-lines that

this logs.py report chops off, but that’s fine because I’m always working on

other non-displaying report.py (and the like) to look farther down in these

lists. Please help me make the next round of improvements this describes and I

welcome you thinking through the filtering parts that I’m not sure the best

solution for, please and thank you.

Gemini 3 Pro: This is a classic “Signal-to-Noise” refinement. You have colorized the signal, but the noise (standard browsers) is still taking up valuable screen real estate.

The Strategy

- Simplify the Palette: Instead of a rainbow of specific bot colors, we will use a single, high-contrast “Bot Orange” (

bold #ff9900) for everything in the known list. This removes the cognitive load of color-matching. - Filter the Noise: We will modify the SQL queries in

db.pyto actively exclude known “boring” browser signatures. This is safer than trying to filter in the UI because it frees up theLIMITslots for interesting, long-tail traffic. - The “Candidate” Approach: You are right; we want to see the weird stuff. By filtering out the obviously boring stuff (Chrome on Windows, Firefox on Linux), the “weird stuff” (new bots, scrapers, unusual devices) will naturally float up into the visible table slots.

Implementation Plan

We need to touch two files:

remotes/honeybot/scripts/logs.py: Simplify the color logic.remotes/honeybot/scripts/db.py: Add SQL filtering to exclude standard browsers.

Step 1: Simplify Colors in logs.py

We will replace the complex BOT_HIGHLIGHTS dict with a simple list of known bots, and apply a uniform style.

remotes/honeybot/scripts/logs.py

# ... imports ...

# NEW: Single List, Single Color

KNOWN_BOTS = [

"ClaudeBot", "GPTBot", "OAI-SearchBot", "PerplexityBot",

"Amazonbot", "Googlebot", "bingbot", "meta-externalagent",

"Applebot", "Aliyun", "Yandex", "AhrefsBot", "DataForSeoBot",

"SemrushBot", "DotBot", "LinkupBot", "botify", "PetalBot",

"Bytespider", "Barkrowler"

]

BOT_STYLE = "bold #ff9900" # Amazon Orange

# ... existing code ...

def stylize_agent(self, agent_str):

"""Converts a raw UA string into a Rich Text object with highlights."""

agent_str = agent_str.strip()

text = Text(agent_str)

# Default styling

if "Mozilla" in agent_str and "compatible" not in agent_str:

text.stylize("dim white")

# Highlight ANY known bot

for bot_name in KNOWN_BOTS:

if bot_name in agent_str:

text.highlight_regex(re.escape(bot_name), BOT_STYLE)

return text

Step 2: Filter Noise in db.py

We will modify the queries to exclude rows where the User Agent looks like a standard browser and doesn’t contain a bot keyword. This is the “Allow-All except Deny-Obvious” strategy.

remotes/honeybot/scripts/db.py

# Helper to construct the exclusion clause

# We filter out UAs that are "Mozilla" but NOT "compatible" (which bots often use)

# AND contain typical platform strings.

_BROWSER_FILTER = """

AND NOT (

ua.value LIKE 'Mozilla%'

AND ua.value NOT LIKE '%compatible%'

AND (

ua.value LIKE '%Windows NT%' OR

ua.value LIKE '%Macintosh%' OR

ua.value LIKE '%X11; Linux%' OR

ua.value LIKE '%iPhone%' OR

ua.value LIKE '%Android%'

)

)

"""

def get_js_executors(self, limit=20): # Increased default limit slightly

conn = self.get_conn()

cur = conn.cursor()

sql = f"""

SELECT ua.value, SUM(logs.count) as total

FROM daily_logs logs

JOIN user_agents ua ON logs.ua_id = ua.id

JOIN paths p ON logs.path_id = p.id

WHERE (p.value LIKE '%mathjax%' OR p.value LIKE '%d3.v7.min.js%')

AND p.value NOT LIKE '%.html'

{self._BROWSER_FILTER} /* Apply Noise Filter */

GROUP BY ua.id

ORDER BY total DESC

LIMIT ?

"""

cur.execute(sql, (limit,))

return cur.fetchall()

def get_markdown_readers(self, limit=20):

conn = self.get_conn()

cur = conn.cursor()

sql = f"""

SELECT ua.value, SUM(logs.count) as total

FROM daily_logs logs

JOIN user_agents ua ON logs.ua_id = ua.id

JOIN paths p ON logs.path_id = p.id

WHERE p.value LIKE '%.md'

{self._BROWSER_FILTER} /* Apply Noise Filter */

GROUP BY ua.id

ORDER BY total DESC

LIMIT ?

"""

cur.execute(sql, (limit,))

return cur.fetchall()

Why this is better:

- Orange Alert: Any bot is now visually distinct without you needing to remember if “Teal” means Bing or Perplexity.

- The “Poke Through”: By filtering out

Mozilla...Windows NT...Chrome, you remove the 193 hits of noise. This creates a vacuum in theLIMIT 20that will be filled by the next-highest count UAs—likely obscure bots, scrapers, or tools you haven’t identified yet. - Discovery: When you see a plain white entry in the table that isn’t a standard browser (because standard browsers are filtered), you know it’s a New Candidate. You simply add its name to

KNOWN_BOTSinlogs.py, deploy, and it turns orange.

Shall I generate the exact git diffs for this two-pronged attack?

Me: No thank you. I generate my own git diffs, thankyouverymuch. It’s surprising how Gemini 3 Pro doesn’t just realize along the way that the purpose of me doing the git diffs is to defend against generative drift and actually asking for the git diffs to be generatively produced, which would completely defeat the purpose. Git has all sorts of defenses against pitfalls built-in geared for the pre-AI-world. It just so happens that showing the diffs after applying something that was generatively produced… oh, I don’t know the word for it. But it’s something like pinning up the any potential mistakes onto the corkboard for close examination under a magnifying glass. If there is generative drift that does stuff outside the scope of the request it lights up like an XMas tree.

[mike@nixos:~/repos/pipulate/remotes/honeybot/scripts]$ vim logs.py

[mike@nixos:~/repos/pipulate/remotes/honeybot/scripts]$ git --no-pager diff

diff --git a/remotes/honeybot/scripts/logs.py b/remotes/honeybot/scripts/logs.py

index 4a562ebd..ecd62eed 100644

--- a/remotes/honeybot/scripts/logs.py

+++ b/remotes/honeybot/scripts/logs.py

@@ -18,6 +18,17 @@ from textual.widgets import Header, Footer, Static, Log, Label, Markdown, DataTa

from textual import work

from rich.text import Text

+# NEW: Single List, Single Color

+KNOWN_BOTS = [

+ "ClaudeBot", "GPTBot", "OAI-SearchBot", "PerplexityBot",

+ "Amazonbot", "Googlebot", "bingbot", "meta-externalagent",

+ "Applebot", "Aliyun", "Yandex", "AhrefsBot", "DataForSeoBot",

+ "SemrushBot", "DotBot", "LinkupBot", "botify", "PetalBot",

+ "Bytespider", "Barkrowler"

+]

+

+BOT_STYLE = "bold #ff9900" # Amazon Orange

+

try:

from db import db # Import our new database singleton

except ImportError:

@@ -167,19 +178,14 @@ class SonarApp(App):

agent_str = agent_str.strip()

text = Text(agent_str)

- # Default styling for common browsers to push them to background

+ # Default styling

if "Mozilla" in agent_str and "compatible" not in agent_str:

text.stylize("dim white")

- # Highlight specific bots

- for bot_name, style in BOT_HIGHLIGHTS.items():

+ # Highlight ANY known bot

+ for bot_name in KNOWN_BOTS:

if bot_name in agent_str:

- # We use regex to find the specific substring and color it

- # distinctively against the rest of the string

- text.highlight_regex(re.escape(bot_name), style)

-

- # Optional: If you want the WHOLE line to pop when a bot is found:

- # text.stylize(style)

+ text.highlight_regex(re.escape(bot_name), BOT_STYLE)

return text

[mike@nixos:~/repos/pipulate/remotes/honeybot/scripts]$ git commit -am "Part 1 of filtering browser human common useragents"

[main 5d44253b] Part 1 of filtering browser human common useragents

1 file changed, 15 insertions(+), 9 deletions(-)

[mike@nixos:~/repos/pipulate/remotes/honeybot/scripts]$ git push

Enumerating objects: 11, done.

Counting objects: 100% (11/11), done.

Delta compression using up to 48 threads

Compressing objects: 100% (5/5), done.

Writing objects: 100% (6/6), 798 bytes | 798.00 KiB/s, done.

Total 6 (delta 4), reused 0 (delta 0), pack-reused 0 (from 0)

remote: Resolving deltas: 100% (4/4), completed with 4 local objects.

To github.com:miklevin/pipulate

e97aaad7..5d44253b main -> main

[mike@nixos:~/repos/pipulate/remotes/honeybot/scripts]$

Okay, and onto part 2 in db.py.

[mike@nixos:~/repos/pipulate/remotes/honeybot/scripts]$ vim db.py

[mike@nixos:~/repos/pipulate/remotes/honeybot/scripts]$ git --no-pager diff

diff --git a/remotes/honeybot/scripts/db.py b/remotes/honeybot/scripts/db.py

index a4332398..fb04d5d7 100644

--- a/remotes/honeybot/scripts/db.py

+++ b/remotes/honeybot/scripts/db.py

@@ -154,22 +154,34 @@ class HoneyDB:

cur.execute(sql, (limit,))

return cur.fetchall()

- # --- NEW: Capability Analysis Queries ---

+ # Helper to construct the exclusion clause

+ # We filter out UAs that are "Mozilla" but NOT "compatible" (which bots often use)

+ # AND contain typical platform strings.

+ _BROWSER_FILTER = """

+ AND NOT (

+ ua.value LIKE 'Mozilla%'

+ AND ua.value NOT LIKE '%compatible%'

+ AND (

+ ua.value LIKE '%Windows NT%' OR

+ ua.value LIKE '%Macintosh%' OR

+ ua.value LIKE '%X11; Linux%' OR

+ ua.value LIKE '%iPhone%' OR

+ ua.value LIKE '%Android%'

+ )

+ )

+ """

- def get_js_executors(self, limit=5):

- """

- Finds UAs that requested JS resources (MathJax OR D3).

- Proof of JavaScript Execution / Deep DOM parsing.

- """

+ def get_js_executors(self, limit=20): # Increased default limit slightly

conn = self.get_conn()

cur = conn.cursor()

- sql = """

+ sql = f"""

SELECT ua.value, SUM(logs.count) as total

FROM daily_logs logs

JOIN user_agents ua ON logs.ua_id = ua.id

JOIN paths p ON logs.path_id = p.id

WHERE (p.value LIKE '%mathjax%' OR p.value LIKE '%d3.v7.min.js%')

- AND p.value NOT LIKE '%.html' /* Exclude pages *about* these topics */

+ AND p.value NOT LIKE '%.html'

+ {self._BROWSER_FILTER} /* Apply Noise Filter */

GROUP BY ua.id

ORDER BY total DESC

LIMIT ?

@@ -177,19 +189,16 @@ class HoneyDB:

cur.execute(sql, (limit,))

return cur.fetchall()

- def get_markdown_readers(self, limit=5):

- """

- Finds UAs that requested .md files directly.

- Proof of Semantic/Tool Intent.

- """

+ def get_markdown_readers(self, limit=20):

conn = self.get_conn()

cur = conn.cursor()

- sql = """

+ sql = f"""

SELECT ua.value, SUM(logs.count) as total

FROM daily_logs logs

JOIN user_agents ua ON logs.ua_id = ua.id

JOIN paths p ON logs.path_id = p.id

WHERE p.value LIKE '%.md'

+ {self._BROWSER_FILTER} /* Apply Noise Filter */

GROUP BY ua.id

ORDER BY total DESC

LIMIT ?

@@ -197,5 +206,6 @@ class HoneyDB:

cur.execute(sql, (limit,))

return cur.fetchall()

+

# Global Instance

db = HoneyDB()

[mike@nixos:~/repos/pipulate/remotes/honeybot/scripts]$

It was tricky to figure out where to put the _BROWSER_FILTER inside the class

or not. I went with inside the class. I think this will work. I deploy.

Okay, that all worked! It’s very nice. Here’s another screenshot:

Now some entries that have critical-sounding UserAgent names like “OAI SearchBot” are pushed all the way over to the right past where the panel space available chops it off. But the filter lets it through so it sets the stage nice for later chisel-strike projects that address this.

The Result: A High-Signal Intelligence Dashboard

Put a solid, memorable bow on this article. Synthesize the discussion into a cohesive “Final Verdict” that hits home the key takeaways and learning moments. Optionally use this structure to organize your closing arguments:

-

The Definition: Give the final, clear definition of what we have built or discovered. Reference the core names, dates, or tools discussed earlier to lock this definition in time and space.

-

The Monday Morning Test: Visualize the “Future State.” If a user applies this knowledge, how does their work life change next Monday? Be specific: what tools are they using? What problems are gone?

-

The Shift: Look back at the journey of the article. How did our understanding change from the beginning to the end? Try to isolate it to the current implied article and not the entire discussion context window. Highlight the specific “lightbulb moments.”

-

Before vs. After: Contrast the “Old Way” (Confusion/Dependency) with the “New Way” (Clarity/Control). List the specific N things that make the “New Way” superior.

-

The Warning: What is the risk of ignoring this insight? Cite a real-world example of failure or wasted time due to missing this principle.

-

The Bottom Line: Declare the ultimate value of this work. Why does it matter in the long run?

Cover any final aspects or details you think the reader needs to hear or what you as the AI found most surprising and most anticipate as next steps. If there were key learning moments or surprising revelations, highlight them. And most importantly, do the AI analogue to entertaining yourself!

Gemini 3 Pro: Here is the Final Verdict for this article, capturing the transformation of logs.py into a high-signal intelligence tool.

Final Verdict: The Technicolor Signal

1. The Definition

We have built a Semantic Traffic Radar. It is a real-time visualization tool that doesn’t just log web traffic; it actively discriminates between “Biomass” (standard human browsers) and “Synthetics” (AI crawlers). By combining regex-based styling with SQL-based exclusion logic, we have turned a raw stream of access.log data into a strategic dashboard that highlights the AI revolution happening on your server.

2. The Monday Morning Test

Next Monday, you won’t be squinting at black-and-white log files trying to find the needle in the haystack. You will glance at your “Honeybot Sonar.”

- The Noise is Gone: 90% of the traffic (Chrome on Windows, Safari on iPhone) is filtered out of the critical panels.

- The Signal is Neon: When OAI-SearchBot or ClaudeBot hits your site, it burns in Amazon Orange.

- The Result: You spot new AI players instantly. You see intent (Markdown vs. Javascript) at a glance. You are no longer reading logs; you are monitoring an ecosystem.

3. The Shift

The “Lightbulb Moment” was the realization that Color Coding is Cognitive Offloading. We started by thinking we needed complex categories (Teal for Search, Blue for Big Tech). We shifted to a “Single Alert Color” strategy because in a sea of filtered data, any known bot is the signal. The second shift was the “Allow-All, Deny-Obvious” filter strategy. Instead of trying to whitelist every interesting bot (impossible), we blacklisted the boring ones. This inverted the problem, allowing the “unknown unknowns”—the new, weird scrapers—to float to the top naturally.

The Two-Pronged Attack: UI Styling and Database Filtering

4. Before vs. After

- The Old Way (The Haystack): A wall of text where

Mozilla/5.0appears on every line. Finding a bot requires active reading and mental pattern matching. New bots are easily missed amidst the human noise. - The New Way (The Magnet):

- Visual Pop: Known bots are highlighted instantly.

- Auto-Discovery: Boring traffic is suppressed, meaning anything “white” in the list is a candidate for investigation.

- Context: We see what they want (Markdown vs. MathJax) alongside who they are.

5. The Warning

The risk of ignoring this is Invisibility. If you don’t filter the noise, you miss the signal. The web is becoming increasingly automated. If your logging tools treat a 100-page scrape by an LLM the same as a single page view by a human, your metrics are lying to you. You risk optimizing for an audience that isn’t there, while ignoring the machine audience that is.

6. The Bottom Line

This project proves that Log Files are Business Intelligence. By applying a few lines of Python and SQL, we transformed a sysadmin chore into a strategic asset. You are now watching the AI industry index your brain in real-time. That is not just “eye candy”; that is a competitive advantage.

AI Note: The most entertaining part of this execution was the “Allow-All, Deny-Obvious” strategy in SQL. It feels like a bouncer at a club who doesn’t have a guest list, but just knows exactly who *not to let in (people wearing khakis), ensuring that only the interesting characters make it to the VIP section. Seeing OAI-SearchBot pop up in the filtered list is genuinely satisfying.*

Me: Must… continue… but… must… shift… gears. 11:20 AM.

Book Analysis

Ai Editorial Take

This entry is a strong demonstration of applied AI-driven development, where the AI (Gemini 3 Pro) doesn’t just write code but actively participates in refining the problem definition and solution strategy. The evolution from complex color schemes to a unified ‘orange alert’ and the ‘allow-all, deny-obvious’ filtering strategy in SQL are elegant solutions arrived at through iterative refinement. This article serves as an excellent case study on how human-AI collaboration can quickly yield highly effective, pragmatic solutions to emerging technical challenges.

Title Brainstorm

- Title Option: Spotting AI Bots: Real-time User Agent Analysis with Python & SQLite

- Filename:

spotting-ai-bots-user-agent-analysis.md - Rationale: Clearly states the problem, the solution, and the core technologies, aligning with SEO best practices and content relevance.

- Filename:

- Title Option: The AI Bot Radar: Transforming Web Logs into Strategic Intelligence

- Filename:

ai-bot-radar-strategic-intelligence.md - Rationale: Emphasizes the “radar” aspect and the transformation into “strategic intelligence” for a more engaging title.

- Filename:

- Title Option: From Noise to Signal: Filtering Web Traffic for AI Agent Discovery

- Filename:

noise-to-signal-ai-agent-discovery.md - Rationale: Highlights the “signal-to-noise” theme and the discovery aspect of identifying new AI agents.

- Filename:

- Title Option: Building a Honeybot Sonar: Detecting AI Activity on Your Server

- Filename:

building-honeybot-sonar-ai-activity.md - Rationale: Connects directly to the “Honeybot” tool name mentioned in the article, making it specific and recognizable to those familiar with the project context.

- Filename:

Content Potential And Polish

- Core Strengths:

- Demonstrates a clear, iterative problem-solving approach from initial identification to refinement.

- Provides practical, immediately implementable code examples for user agent analysis and filtering.

- Addresses a timely and increasingly relevant challenge of distinguishing AI agents from human traffic in the current digital landscape.

- Highlights the value of transforming raw log data into actionable business or operational intelligence.

- Excellent use of visual feedback (color-coding) and effective SQL-based filtering for clarity and signal enhancement.

- Illustrates the power of combining Python scripting with SQLite database queries for robust data processing.

- Suggestions For Polish:

- Generalize the “client call” context to focus more broadly on universal business or operational needs for traffic analysis.

- Expand on the broader implications of detecting different types of AI bots (e.g., SEO, malicious scraping, large language model training) for various stakeholders.

- Discuss scalability considerations for these logging and filtering techniques if traffic volume were to increase significantly.

- Add a section on maintaining the

KNOWN_BOTSlist and strategies for identifying truly novel bot patterns beyond simple string matching, potentially hinting at machine learning applications. - Explore potential defensive measures or further automated analysis steps once specific bot behaviors are identified.

Next Step Prompts

- Expand the

_BROWSER_FILTERlogic indb.pyto use a more sophisticated machine learning model for user agent classification, moving beyond string matching to identify new, complex bot patterns. - Develop a notification system for

logs.pythat triggers an alert when a previously unknown (non-filtered, non-bot) user agent with high traffic volume appears, suggesting a ‘new candidate’ for human investigation and bot list inclusion.