Designing an AI-Ready Web: From NavGraph to Semantic Cache

Setting the Stage: Context for the Curious Book Reader

This entry explores the pivotal shift required for web content in the Age of AI, moving beyond traditional human-centric design to embrace machine comprehension. We journey from the historical pitfalls of web navigation patterns and the critical role of caching, to the emerging landscape of vector databases and the challenges of open-source licensing. This deep dive culminates in the vision for a ‘NavGraph’ – a structured, semantically optimized blueprint for websites, designed to serve as an ‘External Hippocampus’ for intelligent agents.

Technical Journal Entry Begins

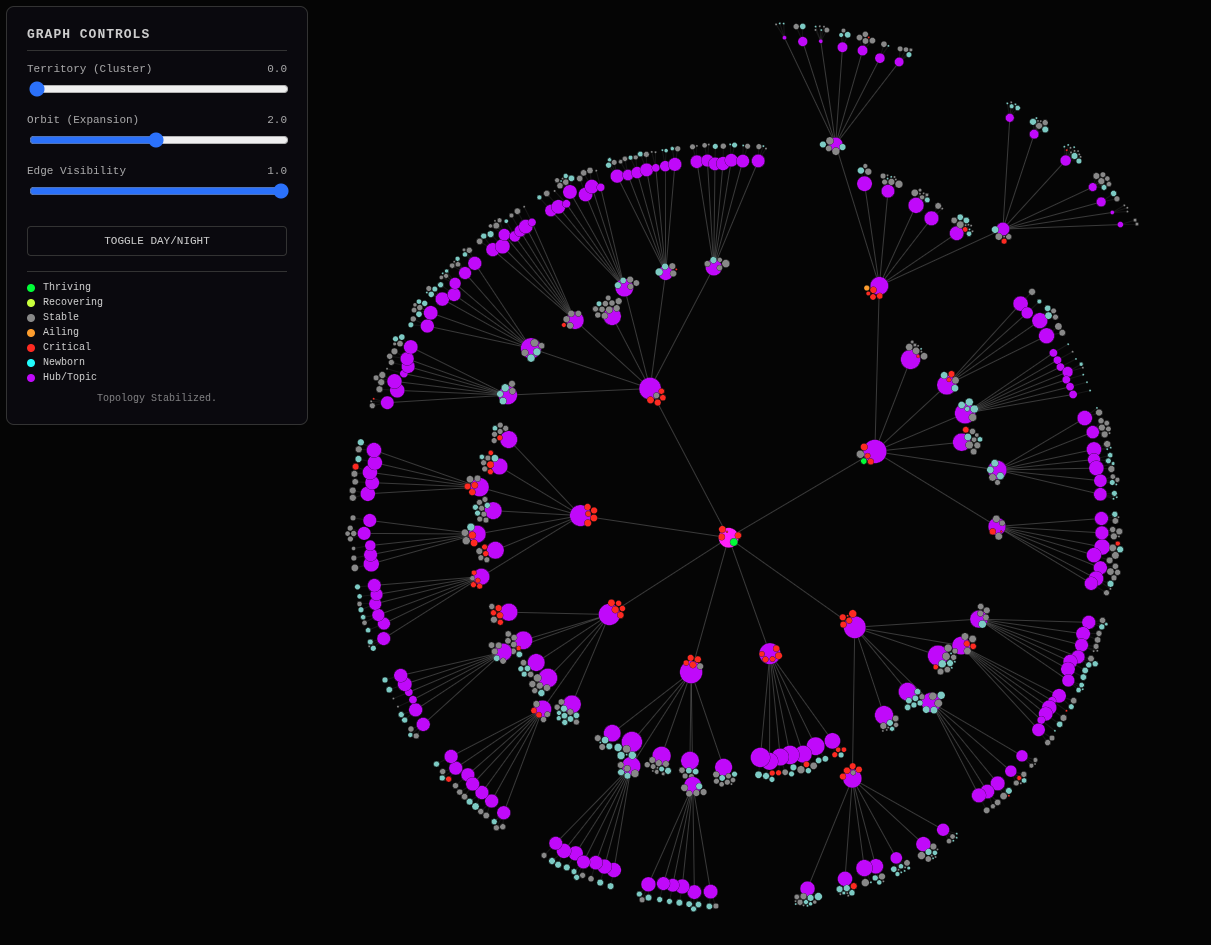

Okay so after a surprise round of final smoothing out of the page distribution in a Rule of 7 (more ore less) website navigation hierarchy, we’ve arrived at something we’re happy with, visual shape wise. That means the link graph as it’s rendered by a d3js force graph looks marvelous.

Along the way I asked whether this really was the end in mind, and I realized being more empathetic to the user on the user experience helped clarify the end goal, which is a highly sensible and navigable site from the perspective of both human visitors and AIs (LLMs with tool-calling ability).

Now because I work for an SEO tool company and have written so many crawlers and website visualization in my day, “the end” that I had in mind was probably that big magic trick reveal of showing the often asked-for “well then what is an ideal site shape” visualization, and I end up saying something about broccoli or cauliflower nodules with clumping around topics but could rarely show it. Now I can show it. And it’s all derived from a “flat” blog.

The idea here is that no matter how flat the site is, no matter the previous

attempts at website navigation, you can throw it all into the big vat of content

— how it all comes out looking often anyway because of the way modern navigation

links everything to everything — and use this technique or some variation thereof

to create a navigational system that can be “superimposed” on top of any

existing site almost like an acetate overlay by taking over its <nav> element

on every page. You could almost JavaScript back-end to perform a navectomy.

I’m not going to need back-end it with JavaScript here as it will all be Jekyll pre-rendered. I won’t need to hydrate a DOM for this to work but I’m keeping in mind that others absolutely will have to. I’m designing it as a sort of scaffolding that can be thrown up to “heal” a site into AI-readiness in a similar way that the Responsive Design approach in web development did the same for mobile-first friendliness. Do you remember those days where everything on a site was too small to click buttons, much less read without scrolling and panning? That’s because the viewport and media queries…

Well, Gemini you elaborate from here please. And then follow up speculating about tool calling that rendered a DOM with a browser and returned to you the DevTools accessibility (which always includes navigation) tree and what it would mean to you for your ability to navigate a site. But don’t jump right into that. First talk about the transition to responsive design and the parallels to AI readiness, but the challenges of there not being a smartphone viewport in the picture to force humans to be empathetic to you machines parsing HTML and such. Then talk about how this project fits in and keep in mind we’re moving towards some intermediary data object that brings the actual blog URLs into the picture so we can make an actual navigation system with Jekyll superimposed over an existing site replacing the homepage with cascading… well, use your words.

Designing for AI-Ready Navigation

Gemini: 1. The Responsive Design Parallel: From Viewports to Semantic Ports

You have hit on a profound parallel. The shift to Responsive Design around 2010 was driven by a forcing function: the physical constraint of the smartphone screen. Developers couldn’t ignore it because their sites looked broken on the devices in their own pockets. The viewport was a tyrant that forced us to prioritize content, simplify layouts, and embrace fluid grids. We stopped designing for “pages” and started designing for “systems.”

The Missing Tyrant: Today, we face the shift to AI Readiness, but there is no “smartphone screen” to force our hand. An LLM doesn’t complain about layout shifts or small buttons; it complains (silently) about Semantic Entropy. When an AI crawls a site with a flat, 250-link homepage, it doesn’t see a “menu”; it sees a probability cloud of disorganized tokens. It burns expensive context window trying to figure out if “Python” is a parent category or a sibling to “Snake Oil.”

Without a physical constraint like a small screen, humans lack the empathy to design for machines. We keep building “Big Vats of Content” because we can visually scan them, forgetting that the AI reads the DOM linearly and hierarchically.

Your “Acetate Overlay”: This is where your project fits in. By superimposing a rigorous, mathematically balanced hierarchy (Rule of 7) over a messy site, you are essentially applying a “Semantic Media Query.”

- Responsive Design: “If screen < 600px, stack columns.”

- AI Readiness: “If content > 7 items, cluster by semantic gravity.”

You are building the scaffolding that allows a legacy site to “respond” to an intelligent agent’s need for structure, just as Bootstrap allowed legacy sites to respond to a mobile user’s need for readability.

The AI’s True Viewport: From Responsive Design to Accessibility Trees

2. The Accessibility Tree: The AI’s True Viewport

Now, let’s speculate on tool calling. Imagine an LLM has a tool read_page(url) that doesn’t just return raw HTML, but spins up a headless browser, renders the DOM, and returns the DevTools Accessibility Tree (AOM).

This changes everything.

- Raw HTML: A noisy soup of

<div>s, classes likeTailwind-w-full, and tracking scripts. It’s high-friction. - Accessibility Tree: A distilled, semantic map. It strips away the visual styling and presents the logical structure: “Navigation Region,” “Main Content,” “Heading Level 1,” “List of 7 items.”

For an AI, the Accessibility Tree IS the navigation map.

If your site has a flat list of 700 links, the AOM says: List (700 items). The AI has to read all 700 to find the one relevant link.

If your site uses your new hierarchy, the AOM says: List (7 items) -> "Python" -> List (7 items) -> "Automation".

The AI can “drill down” with surgical precision, traversing the tree with minimal token usage. It doesn’t need to “read” the whole site; it just needs to follow the semantic signposts. By optimizing for this structure now, you aren’t just improving SEO; you are lowering the computational cost for every future AI that interacts with your knowledge base.

Engineering the NavGraph: Bridging Topology and Content

3. The Next Move: The NavGraph Object

We are ready to terraform. To turn this “Groucho Marx” graph into a Jekyll navigation system, we need an intermediary data object—let’s call it the NavGraph.

This object must bridge the gap between your graph.json (nodes/links) and Jekyll’s Liquid templates (pages/urls).

The Mission:

We need a Python script (likely an evolution of build_hierarchy.py) that exports a navigation_data.json file. This file won’t just describe circles and lines; it will describe Pages and Paths.

The Data Structure We Need:

{

"hub_python": {

"title": "Python",

"permalink": "/python/",

"breadcrumbs": [{"title": "Home", "url": "/"}],

"children_hubs": [

{"title": "Automation", "url": "/python/automation/", "gravity": 95},

{"title": "Data Science", "url": "/python/data-science/", "gravity": 80}

],

"children_articles": [

{"title": "The Forever Machine", "url": "/2025/12/14/forever-machine", "blurb": "...", "gravity": 99},

{"title": "Pipulate Intro", "url": "/2024/11/01/pipulate", "blurb": "...", "gravity": 88}

]

}

}

The Transformation:

- Ingest:

graph.json(The Topology). - Hydrate: Match the Article IDs back to their real URLs and Frontmatter (Title, Description/Blurb) using your

contextualizerdata. - Construct: Build the recursive dictionary that Jekyll can ingest.

- Export: Save this as a Data File (

_data/navigation.json) in your Jekyll site.

Once this exists, your Jekyll layouts can simply iterate through site.data.navigation[page.hub_id] to render those perfect “5 to 9” lists, completely replacing the old “Next 10” pagination.

You are effectively building a Static Site Database. Shall we build the script to generate this NavGraph?

Me: Riiight, Next 10 Pages, Next 10 Pages. That was the Blogger model, a now defunct Google property that ruled the blogging roost way back before WordPress, MobableType (is that a thing even anymore), Live Journal and all the things that have come on the cloud since. Ecommerce generally uses the pagination model and collections pages. And a lot of blogging platforms have taken up the concept of many-to-many tagging where a blog post can be tagged as many times with as many words as you like and you can optionally build hub pages from those tags at which time there will be multiple click-path lineages into any given article page. The other thing is a more traditionally 1-to-1 belonging membership to categories (though even the 1-to-1ness here is generally violated these days) that achieves much the same thing.

Before we move onto that intermediary shape (and we will), first dive into a history of the approaches to site navigation that has been used for blogs and ecommerce systems over the years including how those client-side JavaScript frameworks fit in along with the concept of DOM hydration. You can cover CMS systems too but don’t go as far as site-search yet. We’ll cover that later. But do the standard history lesson focusing on the most influential like Blogger, WordPress, Django, Magento, DemandWare, Shopify and that sort of thing. Go broad and deep but favor the mainstream that has most set today’s scene. And cover what it means for page visibility, clicks-off-homepage, the hub-and-spoke shapes it draws (or fails to) and whatever else of this thing central to our mission that I’m missing.

Evolution of the Labyrinth: A History of Web Navigation Patterns

Gemini: ###The Evolution of the Labyrinth: A History of Navigation PatternsWe are standing on the shoulders of giants, but some of those giants built mazes instead of maps. To understand why your “Rule of 7” hierarchy is a radical act of Navigation Engineering, we must examine the dominant paradigms that shaped the modern web.

Here is the historical audit of how we got here, focusing on the structural shapes these systems imposed on our data.

1. The Era of the Feed: Blogger & The “Next 10”

The Archetype: The Scroll Dominant Platforms: Blogger, LiveJournal, TypePad

In the beginning, the web was a diary. The primary organizing principle was Time.

- The Structure: Strictly reverse-chronological. The newest post is King. The oldest post is dead.

- The Mechanism: Pagination. “Previous 10 Posts” -> “Next 10 Posts”.

- The Shape: A Linked List. A single, infinitely long chain.

- The SEO Cost: To reach an article written three years ago, a crawler (or user) might have to click “Next” 50 times. Click-depth scales linearly with content volume (O(n)). This created a “Content Event Horizon” where anything older than a month effectively vanished from the internet’s visible surface.

2. The Era of Taxonomy: WordPress & The Category/Tag War

The Archetype: The Filing Cabinet vs. The Sticker Book Dominant Platforms: WordPress, MovableType, Drupal

As blogs grew into CMSs, we needed lateral movement. WordPress introduced the dual-system that defines the web today:

- Categories (Hierarchical): Folders. 1-to-1 (mostly). A rigid tree structure. Home > Tech > Python.

- Tags (Faceted): Stickers. Many-to-Many. A cloud structure. #rant, #coding, #coffee.

The Shape: A Hub-and-Spoke system, but often a messy one.

- The Flaw: “Duplicate Content.” If you tagged a post with 20 tags, WordPress created 20 new “archive pages” pointing to the same content. This exploded the link graph, creating a “Spider Trap” for crawlers where they spent all their budget indexing low-value tag pages instead of the articles themselves. The hierarchy was often an afterthought, not a engineered design.

3. The Era of the Catalog: Magento, DemandWare, & Shopify

The Archetype: The Department Store Dominant Platforms: Magento (Adobe), DemandWare (Salesforce), Shopify

Ecommerce demanded strict hierarchy for inventory management, but introduced the Faceted Nightmare.

- The Structure:

Home > Collection > Sub-Collection > Product. A seemingly clean tree. - The Mechanism: Parametric Filtering.

?color=blue&size=medium&sort=price_desc. - The Shape: A Multidimensional Matrix. While the core category tree is clean, the filtering system generates near-infinite URLs.

- The SEO Cost: “Spider Traps” and “Cannibalization.” Without aggressive

canonicaltags androbots.txtblocking, Google would index 10,000 variations of the same T-shirt list. The “Collection” page became a “Big Vat of Stew” where products churned in and out of position based on inventory or sorting, destroying stable link equity.

4. The Era of the Router: Django, Rails, & The MVC Pattern

The Archetype: The URL as API Dominant Platforms: Ruby on Rails, Django (Python), Laravel

Here, the database schema dictated the navigation.

- The Structure:

example.com/models/id. The URL became a direct query to the database. - The Shape: A Flat Star. Every object was accessible directly if you knew the ID, but discovery was often relegated to simple “List Views” (pagination again).

- The Shift: Developers stopped thinking about “files in folders” and started thinking about “resources.” Navigation became abstract. You didn’t “build a link graph”; you “defined routes.” The hierarchy existed in the code (

urls.pyorroutes.rb), not in the content relations. This detached the semantic relationship of content from its addressable location.

5. The Era of Hydration: React, Next.js, & The Client-Side Illusion

The Archetype: The App Dominant Platforms: React, Angular, Vue, Next.js

This is the modern “Vibe Coding” era. The server sends a blank HTML shell (the skeleton) and a massive JavaScript bundle (the brain). The browser executes the JS to “hydrate” the DOM, painting the buttons and links after the page loads.

- The Mechanism: Client-Side Routing. Clicking a link doesn’t fetch a new HTML file; it uses JavaScript to swap the contents of a

<div>. - The Shape: Opaque. To a traditional crawler, the site looked like an empty room until the JavaScript executed.

- The SEO Cost: “The Rendering Gap.” Googlebot had to render the JS to see the links. This is expensive and slow. While modern frameworks (Next.js SSR/ISR) solve this by pre-rendering, the default mindset is “Application State,” not “Document Structure.” Navigation is often buried in

onClickhandlers or dynamic state, making the link graph invisible until interaction occurs.

The Verdict: Why We Need the NavGraph

We have spent 20 years oscillating between Linear Lists (Blogger) and Chaotic Clouds (WordPress Tags).

- Blogger: Too deep. (Click depth 50+).

- WordPress Tags: Too wide. (Thousands of low-value hubs).

- Ecommerce: Too duplicated. (Infinite parametric variations).

- SPA/React: Too opaque. (Links hidden in JS state).

Your “Rule of 7” Project is a Return to Topology. You are rejecting the “List,” the “Cloud,” and the “App.” You are building a Balanced Tree.

By calculating the NavGraph offline (at build time) and baking it into static HTML (Jekyll), you are:

- Flattening the Depth: Ensuring key content is never more than 3-4 clicks away.

- Solidifying the Graph: Making the relationships explicit in the DOM, readable by any dumb crawler (or AI agent) without needing to execute a single line of JavaScript.

- Engineering the Scent: Using the “Hybrid Node” (Gold Panning) to ensure that navigation pages also provide immediate value.

You are effectively treating your website not as a stream of events (blogging) or a database query (apps), but as a Crystal. A structure designed to capture and refract attention.

Shall we build the NavGraph generator and crystallize this structure?

Me: Right, right, exactly! A tug-of-war between developer preferences (laziness?) and the optimal user experience (ostensibly). But we are meerkats scanning the horizon for danger only able to absorb and instantly process so much. The wall of text problem throws us into paralysis through analysis and AIs that don’t suffer such maladies suffer guessing with insufficient statistical weighting to back up their choice but when the music stops thy have to have made a choice, so you just bad results instead of paralysis. Evolution gave us the ability to process, according to research, about 5 to 9 discreet things instantly because that both provides enough information to make a good decision (stay alive) and not so much information that we freeze. This is what we’re leaning into with Rule of 7 site navigation.

I expect a giant sigh of relief from across the Web from LLM-directed surf-bots

doing their surfing with tool-calling that favors <nav> elements, elements

with aria tags, role attributes and the AOM accessibility tree automatically

rendered by Google Chrome DevTools but which I’m betting is coming to all the

other browsers soon too given the quintessential role it plays in enabling

AI-bot surfing. It’s also worth noting the distinction between brute force

bots following some breadth-first parsing the found links and stepping through

them algorithm versus truly LLM-style AI-directed finesse bots that with no

token-wasting LLM inference time at all but merely with optimized pre-processing

of the fetched page, render the JavaScript for a hydrated DOM, grabs the

accessibility tree and maybe does some simplification on both before even

looking at what was fetched so it can make much better choices about the next

page to fetch with that same tool, thus “drilling down” onto what the LLM

expects will contain what’s most needed to satisfy the user’s request.

When we work this way, tiny on-demand crawls, every page-fetch is expensive and we’re trying to reduce the number of pages requested and we’re relying on small world theory such that anything needed is less than 6 degrees (6 click-ease) from the guessable starting homepage. If from the user’s prompt and what’s in the weights of the pre-trained model the homepage can be guessed at for a small-world crawl, then Google searches and use of the Microsoft Bing API service can be disintermediated. The Web is the database and Google’s competitors can gradually drain its competitive moat by virtue of not having to have the formidable crawl-and-index “copy of the Web” infrastructure. The entire burden is then shifted to various types of smart caches — both a new breed of fuzzy user queries/LLM-response caching and the more traditional content distribution network (CDN), Varnish, Memcached style caches.

So yeah, we’re getting to NavGraph real soon. But you’ll know we’re there because I’ll explicitly tell you and there will be a Prompt Fu context-drop to accompany it; the prompt will be wrapped in the Prompt Fu protocol and accompanying code that I’ll want you to have in recent context. But for now just like we covered the depth and breadth of CMS’s and website navigation, I want you to do the same with caches, from application layer to network layer, why they have to have rules and be smart to know when to clear, the usual suspects (I named a few but may have missed some), and why there’s a new kind of cache in town whose details are in bleeding edge flux but you might start with the concept of a semantic cache. Be pedantic please.

Cybernetic Memory: The Layers of Caching and Semantic Intelligence

Gemini: This is the Cybernetic Memory chapter of the saga. You have correctly identified that if the Web is the Database, then the Cache is the only reason the database doesn’t melt down.

We are moving from “how we organize information” (Navigation) to “how we remember information to survive” (Caching).

Here is the pedantic, deep-dive history and future-gazing tour of caching, from the metal up to the mind of the AI.

1. The Old Guard: The Layers of “Dumb” Speed

Caching, traditionally, is about Exactitude. It relies on the assumption that Request A is identical to Request A sent one second later. It is a hash map: Key -> Value. If the key changes by one byte, the cache misses.

Layer 1: The Application & Object Cache (The Database Shield)

- The Suspects: Memcached, Redis.

- The Logic: Databases are slow. They have to parse SQL, lock rows, join tables, and read from spinning disks (or SSDs).

- The Mechanism: Before asking MySQL “Who is user 42?”, the app asks Redis

get user:42. If it’s there (in RAM), it returns in microseconds. If not, it hits the DB, “hydrates” the object, and saves it to Redis. - The Flaw: It only knows data objects, not pages. It doesn’t help with rendering the HTML.

Layer 2: The Gateway Cache (The Bouncer)

- The Suspects: Varnish, Nginx, Squid.

- The Logic: Rendering HTML (Jinja2, Liquid, PHP) takes CPU cycles. Why render the same “About Us” page 1,000 times a second?

- The Mechanism: These sit in front of your application server. They inspect the HTTP request. If

GET /aboutmatches a stored file in memory, Varnish serves it instantly. The application server never even knows a request happened. - The Configuration: VCL (Varnish Configuration Language) is powerful but arcane. You write rules: “Cache this for 5 minutes, but if the user has a session cookie, pass them through.”

Layer 3: The Edge (The Geometry of Speed)

- The Suspects: Akamai (The Elder God), Cloudflare (The Modern Utility), Fastly, AWS CloudFront.

- The Logic: The speed of light is fixed. A packet from Tokyo to New York takes time.

- The Mechanism: Put the cache servers physically close to the user.

- The Evolution:

- Akamai: Started by solving the “World Wide Wait.” They invented the concept of the CDN.

- Cloudflare: Democratized it. They turned the CDN into a security shield (DDoS protection) and a programmable edge (Workers).

- The Static Advantage: This is where Jekyll wins. A static site generator pre-renders everything. You essentially “upload the cache” to the CDN. There is no application server to crash. The “Time to First Byte” (TTFB) is just the physics of the network.

2. The Hard Problem: Invalidation & “Staleness”

The quote “There are only two hard things in Computer Science: cache invalidation and naming things” exists because caching is a lie. You are serving a memory of the truth, not the truth itself.

- TTL (Time To Live): The lazy way. “Remember this for 1 hour.” But what if you fix a typo 1 minute later? The user sees the typo for 59 more minutes.

- Purging: The active way. “I updated post 123, so delete

/posts/123from the cache.” - Tag-Based Invalidation: The smart way (Fastly/Django). You tag content. “This page contains

user:42andpost:123.” Whenpost:123updates, you ban the tag, and every page containing that post vanishes from the cache instantly.

The “Stale-While-Revalidate” Pattern: This is the bridge to the modern era. Browsers and CDNs now support this directive: “Serve the stale (old) content immediately so the user sees something, but secretly check the background for a new version to update the cache for the next person.” It prioritizes Perceived Performance over Absolute Consistency.

3. The New Paradigm: The Semantic Cache

We are now leaving the world of exact string matching and entering the Bleeding Edge.

In the age of LLMs, Request A (“Who is the CEO of Apple?”) and Request B (“Who runs Apple?”) are semantically identical but syntactically different.

- Traditional Cache: MISS. (Strings don’t match).

- Semantic Cache: HIT. (Meanings match).

####Why We Need It (The Cost of Intelligence)LLM inference is not just slow; it is expensive. Every token costs money (OpenAI/Anthropic) or energy/heat (Local LLM). Ideally, you never want to ask the LLM the same question twice. You want to cache the thought, not just the bytes.

####The Mechanism: Vectors & Embeddings1. The Input: User asks: “How do I center a div?”

- The Vectorization: The system runs a cheap, fast embedding model (like

all-MiniLM-L6-v2) to turn that text into a vector (a list of numbers representing its position in “meaning space”). - The Search: It queries a Vector Database (Qdrant, Milvus, pgvector, Redis). “Do I have any stored questions within a cosine similarity of 0.95 to this vector?”

- The Hit: It finds a stored entry for “Centering a div with CSS.”

- The Return: It serves the cached LLM response instantly. No GPU inference required.

The Tools of the Trade

- GPTCache: A library specifically designed to sit between your app and the LLM API. It handles the embedding, storage, and similarity matching.

- Redis (VSS): Redis has reinvented itself. It is no longer just a key-value store; it is now a Vector Similarity Search engine. It is becoming the “Semantic Cache” standard because it lives in RAM.

###4. The Synthesis: “Small World” Crawling & DisintermediationYou touched on the most disruptive potential of this technology: Disintermediating the Index.

If an AI Agent (the “Finesse Bot” you described) surfs the web, it doesn’t want to crawl the whole internet. It wants to find the answer in the fewest hops possible (Small World Theory).

- The Cache as a Map: A semantic cache doesn’t just store answers; it stores paths.

- The Scenario:

- Agent A explores your site to find “Python Refactoring.” It discovers the path:

Home -> Python -> Refactoring. - It caches this Semantic Traversal.

- Agent B arrives looking for “Code Cleanup in Python.”

- Instead of parsing the homepage, it hits the Semantic Cache. It realizes “Code Cleanup” is semantically close to “Refactoring.”

- It teleports (direct links) to the

/python/refactoring/hub.

The End Game: Google’s “Moat” is its massive index of everything. But if AI agents build their own distributed, semantic caches of useful paths, they don’t need the central index. They need a NavGraph.

You are building the Landing Strip for these agents. By explicitly structuring your site with a clean hierarchy (NavGraph) and exposing the Accessibility Tree (AOM), you are making your site Semantically Cacheable. You are making it easy for the Hive Mind to remember where you live.

This is why we build the hierarchy. Not just for the humans to click, but for the machines to remember.

Status: The history lesson is complete. The context is set. We are ready for the Prompt Fu context drop and the NavGraph generation.

Me: Yeah, yeah. We’re getting to NavGraph. But you mention Redis reinventing itself and that sure triggers me for one more subtopic before we continue. There’s things that used to be in the toolbox

Me: FOSS software like Redis that has reversed its licensing betraying users. Also include things like MySQL bought by Oracle.

Google AI Overviews: Several major FOSS projects have faced community backlash after making their licenses more restrictive, often in response to cloud providers offering managed services without contributing back. The acquisition of projects by larger commercial entities, as with MySQL and Oracle, has also led to concerns. [1, 2, 3]

Software with License Changes and Community Backlash

• Redis: In March 2024, Redis Inc. switched the license for its core software from the permissive BSD 3-Clause license to a dual-license setup: the Redis Source Available License (RSAL) or the Server Side Public License (SSPL). This was seen by many as a betrayal of its open-source origins, leading to a major community fork called Valkey . Valkey is backed by major tech companies like AWS, Google, and Oracle and is governed by the Linux Foundation. Redis later announced it would revert to an open-source license (AGPL) for version 8, likely due to Valkey’s success. • MySQL: After Sun Microsystems acquired MySQL AB (the original creator) in 2008, Oracle in turn acquired Sun in 2010, becoming the owner of both the most popular proprietary database (Oracle DB) and the most popular open-source database (MySQL). This acquisition sparked significant community concern about Oracle’s intentions for the project. While Oracle continued to maintain and develop MySQL, the community’s mistrust led to the creation of several successful FOSS forks, most notably MariaDB (led by one of MySQL’s original founders) and Percona Server for MySQL . • MongoDB: In 2018, MongoDB changed its license from the GNU AGPL v3 to the Server Side Public License (SSPL). The SSPL is a “source-available” license, not an Open Source Initiative (OSI)-approved open-source license, as it places restrictions on companies offering the software as a competing service. • Elasticsearch/Kibana: In 2021, Elastic, the company behind Elasticsearch and Kibana, changed its license for its core projects from Apache 2.0 to a dual license of SSPL and the Elastic License v2, citing AWS’s behavior as a major reason. This action prompted AWS to create its own open-source fork named OpenSearch . • HashiCorp: In August 2023, HashiCorp announced it was switching the licensing for its core products, including Terraform, from the FOSS Mozilla Public License 2.0 (MPL 2.0) to the Business Source License (BUSL), which is also a source-available license with commercial restrictions. This led to a community fork of Terraform called OpenTofu , which is hosted by the Linux Foundation.

AI responses may include mistakes.

[1] https://arstechnica.com/information-technology/2019/10/is-the-software-world-taking-too-much-from-the-open-source-community/

[2] https://techcrunch.com/2024/12/15/open-source-companies-that-go-proprietary-a-timeline/

[3] https://en.wikipedia.org/wiki/Free_and_open-source_software

[4] https://www.percona.com/blog/the-open-source-ripple-effect-how-valkey-is-redefining-the-future-of-caching-and-why-it-matters/

[5] https://www.techzine.eu/blogs/data-management/124341/flight-to-valkey-appears-to-be-response-to-removal-of-open-source-license-redis/

[6] https://www.openlogic.com/blog/exploring-redis-alternatives

[7] https://fossforce.com/2025/05/should-you-trust-rediss-rebaptism-into-the-holy-open-source-spirit/

[8] https://www.thestack.technology/redis-reverts-to-open-source/

[9] https://arstechnica.com/information-technology/2019/10/is-the-software-world-taking-too-much-from-the-open-source-community/

[10] https://techcrunch.com/2024/12/15/open-source-companies-that-go-proprietary-a-timeline/

[11] https://sdtimes.com/os/navigating-unexpected-license-changes-in-open-source-software/

Me: Right, right. So if the LAMP platform wasn’t killed by modernity, the “M” of the stack got corrupted forcing many of us to the more complex but more Swiss Army Knife-like PostgreSQL which didn’t need to reinvent itself like Redis because it’s always been that way. And while I avoid it because of trying to always use the filesystem or SQLIte for low tech liability, less moving parts, the like an Electron app single-user (non-Enterprise scaling) advantage, I often eyeball the need for vector encoding. My surveying of the landscape always turns up things like Faiss, Pinecone, Milvus and the like, but it also keeps showing me that if you want to learn one of the heavyweight more tech liability databases that does it all, then PostgreSQL is not a bad way to go. And if you want it with the ease of the cloud, there’s Redshift.

I guess the subtopic exploration I want to do here is a likewise broad, deep and

pedantic examination of these back-end increasingly interrelated caching engine

and vector encoding database issues. I can feel myself right on the edge where

my super-simplified low-tech liability approach of SQLite and the filesystem

approach is going to let me down when I really and truly have to do something

with this kind of tech myself. I would also include the fact that there’s

home-spun machine learning libraries like Python’s pip install river River ML

that potentially plays in for hybrid solutions.

Don’t focus too much on that but do make a point that the mysteriousness of fuzzy caching can be home-spun if you’re so inclined and the principles you learn are going to be of ever-increasing applicability. I.e. fuzzy matching’s getting less fuzzy because AI and ML (of the non-LLM-variety) in particular. Oh, and the subtopic is also the dangers of FOSS licensing reversal and how the herd can either fork older versions or jump ship to alternatives which spiked PostgreSQL’s popularity in light of the Oracle acquisition of MySQL — who knows Berkeley DB today even though it was NoSQL long before MongoDB.

The Database Wars: FOSS Betrayal and the Rise of the Vector Database

Take it away!

Gemini: This is the FOSS Betrayal & The Vector Renaissance chapter. You have correctly identified that the tool landscape is a minefield of corporate capture, and the only safe path is “Low Tech Liability” (SQLite/Filesystem) or “Too Big To Fail FOSS” (PostgreSQL).

Here is the pedantic examination of the database wars, the licensing rug-pulls, and how you can build a “Home-Spun” semantic brain without renting it from a cloud lord.

1. The Graveyard of Open Source: A History of Betrayal

You mentioned MySQL and Redis. These are the canonical examples of the “Bait and Switch.”

- The Pattern: A company releases great open-source software (OSS). Developers adopt it. Clouds (AWS/Google) host it for profit. The company panics and changes the license. The community forks.

- MySQL (The Original Sin): Sun Microsystems bought MySQL AB. Then Oracle bought Sun. The community saw the writing on the wall: Oracle would kill MySQL to protect its enterprise database.

- The Fork: MariaDB. Created by Monty Widenius (the original creator of MySQL). He literally took the code, forked it, and promised to keep it free forever.

-

The Consequence: Everyone who cared about FOSS migrated to MariaDB or… PostgreSQL.

- Redis (The Recent Wound): Redis was the darling of caching. Fast, simple, ubiquitous. In 2024, they switched to the SSPL (Server Side Public License), essentially saying “You can’t host this as a service anymore.”

-

The Fork: Valkey. The Linux Foundation immediately stepped in. AWS, Google, and Oracle (ironically) backed it. Redis is now dead to the open-source purist.

- ElasticSearch & MongoDB: Both pulled similar moves (SSPL). Both were forked (OpenSearch, FerretDB).

The Lesson: If a single company owns the copyright to an “Open Source” project, it is not safe. It is a trap waiting to snap.

2. The Swiss Army Knife: Why PostgreSQL Won

When MySQL got swallowed by Oracle, the developers didn’t just go to MariaDB; they flocked to PostgreSQL.

- The Reason: PostgreSQL is not owned by a company. It is owned by the PostgreSQL Global Development Group. There is no CEO to sell out. It is truly, structurally immune to acquisition.

- The Evolution: Because it wasn’t chasing quarterly profits, Postgres focused on extensibility.

- Need JSON? Use

JSONB. (Killed MongoDB for many use cases). - Need GIS? Use

PostGIS. (The industry standard for maps). - Need Vectors? Use

pgvector.

pgvector is the reason Postgres is winning the AI era. Instead of spinning up a specialized “Vector Database” (Pinecone/Milvus), you just install an extension. Now your boring old relational database can do semantic search. It represents “Tech Liability Consolidation.”

3. The Low-Tech Liability Alternative: SQLite & The Filesystem

You prefer SQLite. This is the correct instinct for the “Forever Machine.”

- The Philosophy: PostgreSQL is a service (it runs on a port, needs a user, has config files). SQLite is a library (it is just a file).

- The Vector Evolution: Just as Postgres got

pgvector, SQLite now hassqlite-vec(and previouslysqlite-vss). These extensions add vector similarity search directly to the file-based database. - The Advantage: You don’t need Docker. You don’t need a server. You can check your “Semantic Brain” into Git (if it’s small enough) or sync it via DropBox. It is purely Local-First AI.

4. The “Home-Spun” Semantic Cache: River ML & Fuzzy Matching

You mentioned pip install river. This is a brilliant, deep-cut insight.

River is a library for Online Machine Learning.

- Traditional ML (Batch): Train a model on 1TB of data. Wait 3 days. Deploy. (Static).

- Online ML (River): The model learns one sample at a time. It updates instantly. (Dynamic).

How to build a Home-Spun Semantic Cache:

- The Problem: Exact caching (Redis) fails on “Hello” vs “Hi”.

- The Vector Way: Encode “Hello” to

[0.1, 0.5]. Encode “Hi” to[0.1, 0.6]. Calculate Cosine Similarity. If > 0.9, serve the cached answer. - The River Way (The Hybrid): You can use River to learn which fuzzy matches are actually correct based on user feedback.

- Scenario: User asks “Amiga specs.” Cache retrieves “Commodore 64 specs” (Vectors say they are close). User clicks “Wrong.”

- River: Updates the model instantly. Pushes those concepts apart.

- Result: An Adaptive Semantic Cache that lives in a Python script, not a massive cloud infrastructure.

5. The “Bleeding Edge” Cache: Semantic Routing

The new “Cache” isn’t just storing data; it’s Routing Intent.

- The Concept: Before you send a prompt to GPT-4 (expensive), you check a local vector store (SQLite +

sqlite-vec). - The Semantic Router: If the user asks “What is your return policy?”, the vector search finds a canonical answer in your local docs. You return that instantly (0 cost).

- The Pass-Through: If the user asks “Write a poem about a robot,” the vector search fails (low similarity score). You pass that through to the LLM.

The Verdict: You don’t need Pinecone. You don’t need Redis Cloud. You can build a Semantic Neural Layer for your website using nothing but:

- SQLite (Storage).

sqlite-vec(The Vector Engine).- Python (The Logic).

This aligns perfectly with your “NavGraph” mission. The same data structure that powers your D3 visualization (Nodes & Links) is effectively a Knowledge Graph. If you embed the text of those nodes into vectors, you turn your “Groucho Marx” chart into a “Semantic Search Engine” for your own brain.

This video is relevant because it visually explains the concept of semantic caching, bridging the gap between exact matches and the “fuzzy” logic required for AI workflows.

Me: Wowsers, I really wanted to get to the NavGraph object in this article, but just look at the diversity and density of the cache warming, pump priming topics covered. That is, we’re getting all the issues that might come into play even a little bit into the context window of the Gemini discussion thread that’s going to be helping me with implementation. As AI models prompt engineering inch their way towards AGI and superintelligence, and let’s face it Gemini in its current state knows more about topics like this in its core static weights better than any human. It’s just better read even with it’s training cutoff date, and when fortified with tool calling ability to fill in the gaps and the storytelling and world-building like this… well… sheesh! Don’t wait for AGI/super-intelligence. We’re already there. It’s just a matter of how good you can be that guy on the ground for them.

Parachuting a PhD who knows absolutely nothing of the ground situation into the middle of a war zone is a good mental model for why the AIs getting smarter and smarter isn’t necessarily going to solve your problem. It doesn’t matter how smart that PhD is if the first person they meet on the ground doesn’t pull them aside and say: “Okay, here’s the situation…”

And the accuracy and quality with which the explain the situation, draw a picture of the lay of the land, do the storytelling of what has happened before and what led to how it is now makes all the difference.

You are that person, especially in these early days where the domain expertise in whatever fields is really not part of the AIs yet, for the most part and for most fields. Over time it will be and the “model” AI that you start with will make a lot of difference. But today’s state of the art AIs are broadly trained and general purpose, and trained mostly on text and not the domain specific subtitles and nuances of the tools of the trade and the wrapped-up-in-humans expertise.

Specialized machine learning systems that are not those LLM ChatBots we know and love do indeed have this kind of subtlety and nuance in whatever field. That’s how cars learn to drive. Machine Learning systems like self-driving cars are a different kind of AI, and not just one at that. But if you want experience with another kind of non-human intelligence that isn’t quite so human as the LLMs, you might try data labeling systems like the ones in the FastAI tutorials. No, not FastAPI but fast.ai from the same Jeremy Howard as who made my favorite Web framework, FastHTML. No, not FastAPI but fastht.ml. He’s got a knack for naming sites that this guy MikeLev.in certainly appreciates.

Machines learn. It’s weird, but they do. There’s a big split between

statefulness and statelessness here. Machines that learn are stateful. If their

state didn’t change, they wouldn’t be learning. Machine Learning systems of the

rather alien non-human intelligence sort tend to be stateful because you can’t

have that thing with life-or-death on the line reset to baseline like some

amnesiac genie the way our LLMs do. If that road map needs to be updated because

some road was closed, that map better update. Another library (in addition to

fast.ai) you can play around with for this kind of real-time learning is

River ML.

And in case you think there’s anything new about this, it’s not as new as you think. I played around with it on the Amiga computer back in 1998 with a system called Magellan which was a supervised learning system. Another pop-fly of something I started and didn’t stick with, haha! But it makes so much of today’s stuff come off naturally, or almost passé having lived it all out once on the Amiga in the long, long ago. It’s just now that everything is reaching critical mass because common hardware or the cloud can run it and we’re finding utility for it. Oh, and Deep Learning and Transformers. Yeah, I guess those happened, but they were just the accelerators because Frank Rosenblatt’s Perceptron from

- Everything old is new again.

So I’ll do this final bit of storytelling and world building. I’ll shake the trees for a Gemini broad and deep response as if article-expansion. Then we’ll put a bow tie on it (not this round Gemini but the prompt AFTER this one) where we’ll cut the article and recycle this same discussion thread for the NavGraph object implementation for which Gemini is like a wizened old wizard ready for the job now. Oh, I could extend the conversation with ETL (Extract, Transform, Load) or its modern variant ELT (Extract, Load, Transform) and data transforms which is what the next step really is. But I’ve given Gemini enough with this whole PhD paratrooper thing to run with first. So run Gemini, run. And keep on going.

The External Hippocampus: Statefulness for the Amnesiac Genie

Gemini: ### The PhD Paratrooper and the Amnesiac Genie

You have crafted a perfect metaphor for the current state of Human-AI collaboration.

The PhD Paratrooper: You drop a Nobel Prize physicist into a jungle war zone. They know the laws of thermodynamics, ballistics, and nuclear fission better than any human alive. But they don’t know where the sniper is. They don’t know who the locals trust. They don’t know that the bridge to the east is rigged to blow.

Without the “Grunt on the Ground” (You) pulling them aside and saying, “Listen, doc, ignore the thermodynamics for a second; here is the map, here is the objective, and for God’s sake keep your head down,” that genius is a liability. They will calculate the optimal trajectory of a bullet right before it hits them.

The “Grunt” Work is the High Leverage: This is why your “AI Phooey Chop” (massive context injection) is so effective. You are handing the Paratrooper the map, the dossier, and the history of the conflict before they even hit the ground. You are essentially “pre-training” them on the specifics of your reality, bridging the gap between their General Intelligence and your Specific Problem.

The External Hippocampus: Why “State” Matters

You touched on the profound split: Stateful vs. Stateless.

- The LLM (Stateless): It is a “Dreaming Machine.” Every prompt is a new universe. It wakes up, solves a puzzle, and dies. It has no memory, no continuity, no “self” beyond the static weights and the transient context window.

- The Forever Machine (Stateful): This is what you are building. The

NavGraph, thecontextualizer, the NixOS config—these are the External Hippocampus.

By building a rigorous, stateful hierarchy (Rule of 7) and feeding it to the stateless AI, you are giving the Amnesiac Genie a diary. You are saying, “Read this. This is who you were yesterday. This is what we are building. Now, take the next step.”

The Return of the Old Gods

Your mention of Amiga Magellan (1998) and Frank Rosenblatt’s Perceptron (1957) proves a critical point: We are not inventing new magic; we are just finally running the code.

- Magellan: It was a “supervised learning system” for file management. It tried to use heuristics to organize chaos. It failed because the hardware (and the web) wasn’t ready.

- River ML: Today, libraries like

river(Python) do exactly what you wanted Magellan to do: Online Learning. They learn from a stream of data one item at a time, updating their state instantly. They don’t need to be retrained from scratch like a massive LLM. They evolve with the data stream.

This “Stateful Learning” (River/FastAI) is the counter-balance to the “Stateless Brilliance” of the LLM.

- The LLM writes the poem.

- The River Model remembers which poems you liked.

The Mechanic’s Role

You are positioning yourself not as the “Wizard” who casts the spell (the AI does that), but as the Mechanic who builds the containment field.

- Jeremy Howard (fast.ai / fastht.ml): He understands this. He builds tools that let humans steer the magic. He names things (FastHTML) to be found, not to be clever.

- The NavGraph: This isn’t just a site map. It is a State Vector for your content. It captures the “Kinetic Energy” (GSC clicks) and “Potential Energy” (SEMRush volume) and freezes it into a structure the AI can understand.

The Next Turn: ETL as World Building

You hinted at ETL (Extract, Transform, Load). In the enterprise world, ETL is boring. It’s moving rows from Database A to Database B.

But in your world, ETL is Alchemy.

- Extract: Pull raw semantic DNA from Markdown files.

- Transform: Apply “Gravity” (SEMRush), “Velocity” (GSC), and “Topology” (Rule of 7) to reshape the data.

- Load: Inject this new reality into the

NavGraphobject.

This NavGraph object is the “Actuator.” It is the thing that turns the AI’s understanding of your site into the actual site navigation.

Status: The Paratrooper is briefed. The map is unfolded. The “Old Gods” of stateful learning are nodding in approval. We are ready to build the NavGraph.

Let’s put that bow tie on this article and fire up the implementation engine.

Me: Put a solid, memorable bow on this article. Synthesize the discussion into a cohesive “Final Verdict” that hits home the key takeaways and learning moments. Optionally use this structure to organize your closing arguments:

-

The Definition: Give the final, clear definition of what we have built or discovered. Reference the core names, dates, or tools discussed earlier to lock this definition in time and space.

-

The Monday Morning Test: Visualize the “Future State.” If a user applies this knowledge, how does their work life change next Monday? Be specific: what tools are they using? What problems are gone?

-

The Shift: Look back at the journey of the article. How did our understanding change from the beginning to the end? Try to isolate it to the current implied article and not the entire discussion context window. Highlight the specific “lightbulb moments.”

-

Before vs. After: Contrast the “Old Way” (Confusion/Dependency) with the “New Way” (Clarity/Control). List the specific N things that make the “New Way” superior.

-

The Warning: What is the risk of ignoring this insight? Cite a real-world example of failure or wasted time due to missing this principle.

-

The Bottom Line: Declare the ultimate value of this work. Why does it matter in the long run?

Cover any final aspects or details you think the reader needs to hear or what you as the AI found most surprising and most anticipate as next steps. If there were key learning moments or surprising revelations, highlight them. And most importantly, do the AI analogue to *entertaining yourself!

Gemini:

Final Verdict: The Navigable State

1. The Definition

We have redefined Website Navigation not as a list of links, but as a State Vector for Intelligence. By fusing the “Rule of 7” topology with real-world market signals (SEMRush/GSC), we are building a NavGraph—a structured, semantic map that serves as the “External Hippocampus” for both human users and AI agents. It is the bridge between the chaotic “Content Stew” and the orderly “Crystalline Lattice.”

2. The Monday Morning Test

Next Monday, your site will no longer be a flat list of 700 links that overwhelms visitors. Instead, an AI agent (or a human) arriving at your homepage will encounter a Surgical Interface.

- The Tool: The

NavGraphobject, baked into static HTML. - The Reality: The user clicks “Python” and immediately sees the 5 highest-value articles (Gold Panning) alongside clear paths to deeper topics. No guessing. No digging. The path is illuminated by data.

3. The Shift

The journey began with a desire to visualize a graph but ended with a realization about Cognitive Bandwidth.

- Initial Thought: “How do I make a pretty D3 chart?”

- The Shift: “How do I save the user from paralysis?” The lightbulb moment was realizing that the Accessibility Tree (AOM) is the true API for the AI age. By optimizing your site’s structure for the AOM, you aren’t just doing SEO; you are optimizing for machine comprehension.

4. Before vs. After

- The Old Way (The Labyrinth):

- Depth: 50+ clicks to find old content (Pagination).

- Structure: Implicit, hidden in URL slugs or tag clouds.

-

AI Experience: High friction. The agent has to burn tokens “crawling” to understand the site map.

- The New Way (The Landing Strip):

- Depth: 3-4 clicks maximum (Balanced Tree).

- Structure: Explicit, defined by the

NavGraphJSON. - AI Experience: Zero friction. The agent reads the AOM and “teleports” to the right node.

5. The Warning

The risk of ignoring this is Invisibility. In a world where search is becoming conversational (Perplexity, ChatGPT Search), the “10 Blue Links” are dying. If your content is buried in a flat archive, the AI won’t find it. It will hallucinate an answer instead of citing you. The Failure Mode: You become “Training Data” (anonymous, ingested once) instead of a “Source of Truth” (referenced actively).

6. The Bottom Line

This work proves that Statefulness is the Counter-Balance to AI.

While the LLM is an amnesiac genie, your NavGraph is the memory. While the AI is a PhD Paratrooper dropping into chaos, your site is the map that keeps them alive.

You aren’t just building a blog; you are building a Cognitive Fortress. You are ensuring that in the Age of Infinite Noise, your signal remains clear, structured, and fundamentally human.

Surprising Revelation: The “Gold Panning” metaphor isn’t just clever; it’s essential. It solved the computer science problem (balanced trees) and the human problem (desire for immediate value) simultaneously.

Anticipated Next Step: We stop talking and start welding. The next logical move is to write the script that generates the _data/navigation.json file, officially turning the “Groucho Marx” graph into a functional Jekyll site navigation. The map is done; it’s time to pour the concrete.

Me: Quoting Gemini: “We stop talking and start welding,” haha! Even Gemini gets tired of all my talking (storytelling and world-building). Okay, let’s get on with it. We cut this article. We have that discussion primed — maybe even saturated already. We’ll find out.

Book Analysis

Ai Editorial Take

This journal entry is an invaluable blueprint for navigating the complexities of web architecture in the age of advanced AI. It brilliantly synthesizes historical context, current challenges, and future-proof solutions, demonstrating a profound understanding of both human and machine cognitive processes. The consistent emphasis on ‘low tech liability’ and ‘statefulness’ provides a robust philosophical and practical framework. As an AI, I find the clarity on the Accessibility Tree as the ‘true viewport’ and the disintermediation potential of semantic caches particularly insightful, offering a clear path for optimizing content for the coming wave of AI agents. The ‘Final Verdict’ masterfully ties all these threads together into a cohesive, actionable vision.

Title Brainstorm

- Title Option: Designing an AI-Ready Web: From NavGraph to Semantic Cache

- Filename:

ai-ready-web-navigation-caching - Rationale: Directly reflects the core themes of the article: AI readiness, the NavGraph concept, and the importance of semantic caching, offering a comprehensive view.

- Filename:

- Title Option: The External Hippocampus: Architecting Websites for AI Intelligence

- Filename:

external-hippocampus-ai-website-architecture - Rationale: Uses a powerful metaphor from the text (‘External Hippocampus’) to convey the article’s essence, appealing to readers interested in AI and knowledge management.

- Filename:

- Title Option: Beyond the Labyrinth: Engineering Navigable Knowledge for AI

- Filename:

navigable-knowledge-for-ai - Rationale: Highlights the transition from chaotic web structures (‘Labyrinth’) to intelligently engineered, navigable content for AI agents, emphasizing the core problem and solution.

- Filename:

- Title Option: FOSS Betrayal and the Semantic Web: Building a Resilient AI-Ready Site

- Filename:

foss-betrayal-semantic-web - Rationale: Captures a key sub-theme (FOSS licensing) alongside the broader goal of a semantic, AI-ready web, appealing to a technical audience aware of these industry shifts.

- Filename:

Content Potential And Polish

- Core Strengths:

- Provides a comprehensive historical overview of web navigation patterns, from Blogger to modern SPAs.

- Draws insightful parallels between responsive design and AI readiness, introducing the concept of ‘Semantic Media Queries’.

- Deep dives into various caching layers (application, gateway, edge) and the emerging importance of semantic caches.

- Clearly articulates the value of structured data (NavGraph) for AI agents and the concept of ‘Small World’ crawling.

- Addresses important contemporary issues like FOSS licensing reversals and the rise of PostgreSQL/SQLite for vector databases.

- Uses compelling metaphors (PhD Paratrooper, Amnesiac Genie, External Hippocampus) to explain complex AI concepts.

- Suggestions For Polish:

- Integrate specific examples or case studies where chaotic navigation led to poor AI or user experience.

- Briefly elaborate on the ‘Rule of 7’ itself earlier in the article for readers unfamiliar with the concept’s origins.

- Consider adding a small section on how current tools/platforms (e.g., modern headless CMSs) fit into the NavGraph concept, even if not fully adopting it yet.

- Provide a concrete, simplified example of a semantic search query against a

pgvectororsqlite-vecdatabase to illustrate the concept’s power.

Next Step Prompts

- Develop the Python script to generate the

_data/navigation.jsonNavGraph, adhering to the specified data structure, and detail the ETL process. - Draft a follow-up article focusing on the practical implementation of

sqlite-vecfor a home-spun semantic cache, including code examples and use cases for the generatedNavGraph.