Rebooting Site

Getting Serious About Content Creation

Okay, I’ve got to get serious about this tech book.

And I should re-launch my YouTube presence.

And both should take a similar idea-capture, cross-link, transform & sieve approach.

Organic Growth Strategy

Don’t boil the ocean. Go at my own pace, jumping from topic to topic organically as I see fit. Don’t force it. Let it happen naturally.

Just keep the pressure on and never stop providing mouth-of-the-funnel material. It’s the fodder content that gets put in context and perspective with your other content through references and cross-linking, and then sets the stage for machine learning, pattern recognition, LLMs, AIs or what have you to come in and make sense of it in different ways and at different times.

That process results in distilled, focused, and varied derivatives of the original content. I query books into existence.

Overcoming Creative Resistance

I have to force myself to take steps that I am often remiss or reticent to do. Remiss in that I just don’t want to. I want to keep things simple. Video editing is one of those things. It’s a huge violation of the 80/20-rule in keeping progress moving forward because it swallows up all your time. But without video editing, your (my) message will never be bottled-up and tight and polished enough for the masses. And reticent because everything I do is a source of competitive advantage in the job market, keeping me sharp and ahead of my peers. I look at what’s taken for Python SEO and I laugh.

Site Reboot in the AI Age

Okay, speaking of which I’ve been rebooting my whole presence. In a way, it’s a violation of basic SEO principles of keeping your good producing URLs unchanging forever, because long-established URLs are gold. Changing the URL is quite literally throwing out gold in the trash in today’s information age. Yet, that’s what I’ve done with my site, and I don’t care. It’s an experimental site and always has been. And so the new experiment is a full reboot in the age of the rise of AI.

The Graphics Dilemma

Graphics? OMG, another thing I’ve been hugely remiss and reticent about doing because it slows down journaling like this and creates stuff that needs to be thought about when I do data transforms of my public work journal, distilling it into the future-proofing tech book. The fewer weird things going on in a pure text source for the queries and transforms, the better. But this is one of those capture-it-now-or-never situations. So it’s forcing my hand. Let’s share some graphics.

Fresh Start Strategy

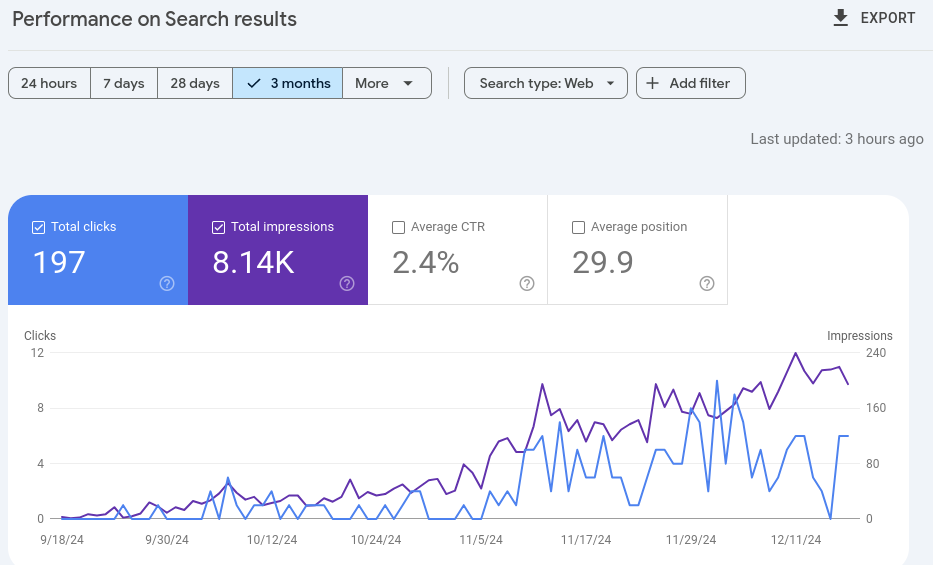

So this is not a “from scratch” site, as MikeLev.in has existed for many years. But I made the decision to basically make a fresh start now with the world changing so dramatically. None of the old topics I covered felt relevant anymore, and the new topic of future-proofing feels like it needs to take center stage. So I wiped it and made a clean slate. No redirects. No preservation of old assets. Nothing. And I let it sit that way for a while so any legacy performance I had could drop off, and as you can see, my site was pretty much non-existent in search back in September 2024.

The Post-ChatGPT Journey

Then on September 8th, 2024 I started blogging again. That’s almost but not quite 2 years after the debut of ChatGPT in late November 2022. Two years to let it dawn on me quite how the world has changed, to internalize the meaning and to take inventory of all my technical skills and know-how.

About a year after that, I switched jobs and went to work for Botify. This is part of an aligning of my Ikigai, which I am increasingly talking about here. My book is about future-proofing, but the book is going to emerge organically as a result of my daily work writing, like this.

The Evolution of My Development Environment

I live and think and work in vim. Technically now, it’s NeoVim (or just nvim), but I still consider it vim. I only made the switch because when I wanted to test out the OpenAI-based Microsoft Copilot (now GitHub Copilot) without being forced onto VSCode, nvim was supported while vim wasn’t. During that switch I also discovered how much I like writing my automation macros in Lua (vs. VimScript). It was a difficult switch to make intellectually, but muscle-memory-wise it was seamless. It’s a demonstration of how good tool selection lets you preserve your most important personal asset, and the hardest one to reproduce quickly: your habits.

Embracing VSCode Through Cursor AI

I’ve successfully avoided VSCode all these years, really despising the whole directory-diving cluttered zone-busting flow-interrupting interface. Then along came the Cursor AI text editor. I didn’t jump right on the bandwagon. I tested alternatives in NeoVim. I tried Jupyter AI. And to maintain an open mind about it, I tried Cursor AI, and now I’m on a VSCode fork… not as my main thinking/working/being environment (vim/nvim remains that), but for interacting with Anthropic Claude at seemingly unlimited levels at a very fair price. They got my $20/mo prize that all these vendors seem to be seeking. Technically, OpenAI did, and it’s a match made in heaven with Claude 3.5 Sonnet. It’s worth learning VSCode for (technically, a VSCode fork, but same thing).

The AI Assistant Landscape

I’ve been on VSCode before. I tried the VSCodium fork for a fully FOSS version, and I’ve tried it native on Mac, Windows and Linux! So I’m coming from a place of having tried to make it work before but failed. I just couldn’t see the value of doing everything the Microsoft way, which both disrupts existing timeless muscle-memory habits and breaks the flow state. It was a lose/lose proposition on almost every level… but then Cursor AI. Nuance matters. Subtlety matters. And Cursor AI got the nuance and subtlety of an AI code assistant down far better than GitHub Copilot and Jupyter AI.

The FOSS Dilemma

The FOSS open source alternatives to Cursor AI are popping up left and right. But as much as I love FOSS, this is the kind of disjointed red herring rabbit hole fragmentation that I hate. I love vim/NeoVim because it has emerged dominant while still being 80/20-rule good enough for most cases. Learn vim skills and be set for the rest of your life, because everyone emulates vim mode. It’s win/win. But all these different AI code assistants… ugh! You can’t walk away from the benefits, but you can’t invest your future into a particular one.

Finding Balance in the AI Era

And so… and so… a VSCode fork (you can’t go wrong knowing VSCode at this point) with the darling of the tech circles, Cursor AI, with considerable personal experience with different AI code assistants to validate that Cursor AI really is something special. They got the subtlety and nuance right in the interface in the ways that make all the difference. It is that much better than the first on the scene, Copilot, and the one I really in my heart wanted to win, Jupyter AI.

The Future-Proofing Process

And so, I blog. I write and I think and I future-proof. I preserve everything it is about my existing tools and habits that survive the AI-disruption, and I do all the little tweaks, and yes sometimes compromises (it is 80/20 I advocate after all) to directionally adjust my old ways to the new inevitabilities.

Site Reset Results

Resetting my site was one of just those things, and 3 months into it now you can see the effect gloriously in Google Search Console (GSC) in a dropping down to nothing! Nada! Zilch! I have other tests going on with true from-scratch websites, but with this, my personal one, it’s about the future-proofing book.

Writing Process and Philosophy

I write what comes to mind in the sequence it comes to mind. I do it at the beginning of the day, hoping to have guessed the topic of the day’s article correctly, but knowing that I may not. Trying to do it consistently, one article per day, but not being a perfectionist about it. Using it to control my directional decisions, both in my personal and professional life, aligning the topics to what I’m dealing with at work, getting plenty of overlap and keeping the concept of Ikigai in mind.

The Art of SEO

What I love is the use of tools that have become almost like a part of your body for expressiveness in artistic and poetic ways that have some form of practicality to them. So yeah, search engine optimizer (SEO). I guess I will continue to be an SEO because data scientist? Developer? I cringe at the thought of… what, precisely? Not forging my own way? A less artistic canvas to work upon? This is an idea I will have to flesh out more in future posts.

Looking Ahead

I have 2 calls coming up later today that I have to be very prepared for. It gets right to the heart of these issues. Knowing what to do and why.

I have to lean into the strengths of the Botify product more than ever before.

Desktop Environment Choices

I also am going to lean into the Linux GNOME desktop, which is the general default of most distributions these days (over KDE Plasma or some other custom fringe thing). There are decades of hard-won muscle-memory lessons here. There may be something superior in the fringes with desktops, but if you’re training your habits and it’s not on Mac or Windows, then it had better be on GNOME or KDE Plasma. And between the two of those, GNOME is feeling more timeless. KDE just feels like Macs and Windows, I’m sure by design, but that’s a weakness and not a strength. All the best lessons of Mark Shuttleworth from Canonical Ubuntu with the Unity interface experiment have been wrapping back into GNOME, making it very professional and muscle-memory habit friendly.

Strategic Transitions

So, it’s an era of carefully chosen transitions for me. A few sure bets and a few gambles. Of those gambles, FastHTML is probably the biggest. HTMX, one of the technologies it’s based on, is a little less of one, I think. But the writing’s on the wall: if you dislike heavy-duty JavaScript frameworks, don’t want to take up vanilla JavaScript, and prefer a Python-centric (aka Pythonic) approach, then FastHTML has solved all the problems. It is the logical choice, and we are in the very earliest gestational days of that, when people are just realizing what’s being born. I am all over that bandwagon and already have a generalized web framework system based on it with integrated local LLM (much more on that soon).

Cycling In New Pages

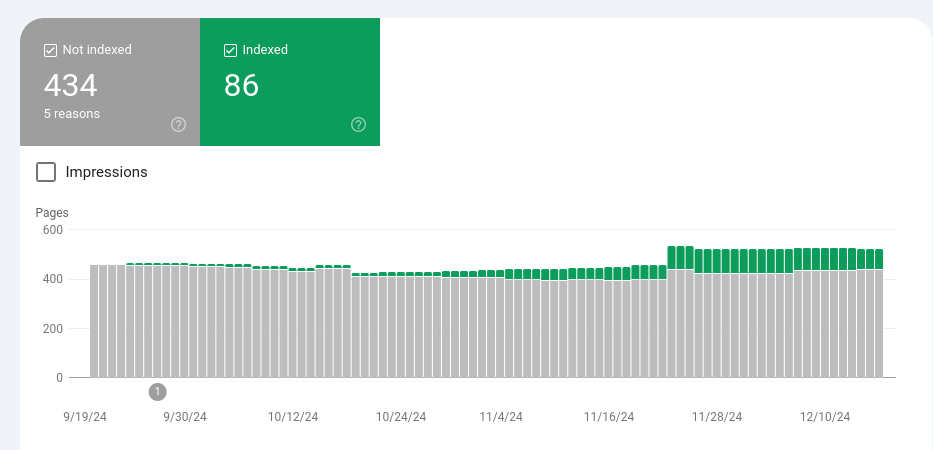

Here’s another image out of my Search Console, showing how I contracted the site down to just a homepage and grew it back out with the blog. There is some notion that the era of the long tail (which I helped advocate when my HitTail Web 2.0 site was a thing) is dead. The idea is that companies with an identified brand are white-listed and nobody else has a chance. Well, this is a classic writing strategy, but a genuine one where I’m not pandering to search nor trying to make any money off of it. I am just being my genuine self, trying to capture something very rare these days: non-AI-generated process. While it’s true that I do speak with AIs in the course of writing these articles, I’m actually framing it as the conversations they are.

Scalpel Like Precision

You can see the scalpel-like precision I’m using here, something that’s often a part of my SEO experiments. It’s hard to carry things out as actual scientific tests with controls and statistically significant samples. However, there are diagnostic procedures that are along the same lines that give you practically the same knowledge (and by extension, abilities) to work with. Here, it is that if I have 85 articles published, there should be 86 pages indexed by Google (blog pages plus homepage). The numbers are a wee bit different now as I’m allowing some “leaf” pages that are not purely blog pages to exist. But nonetheless, this is the setup for some rather glorious demonstrations and assuring myself that I still got it. My numbers may not be big, but my cause/effect control is undeniable.
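The arithmetic behind that check is simple enough to sketch in Python. This is only an illustration: the function names and the `leaf_pages` parameter are made up for the example, and the indexed count would be whatever number you read off Search Console.

```python
def expected_indexed_pages(article_count, leaf_pages=0):
    """Every blog article, plus the homepage, plus any extra non-blog leaf pages."""
    return article_count + 1 + leaf_pages


def index_gap(article_count, indexed_count, leaf_pages=0):
    """Positive: pages missing from the index. Negative: more indexed than expected."""
    return expected_indexed_pages(article_count, leaf_pages) - indexed_count


# 85 articles plus the homepage should give 86 indexed pages.
expected = expected_indexed_pages(85)
gap = index_gap(85, indexed_count=86)
print(expected, gap)
```

A gap of zero is the cause/effect confirmation; anything else is a prompt to go look for what is missing or what is extra.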

Monitoring Crawl Budget

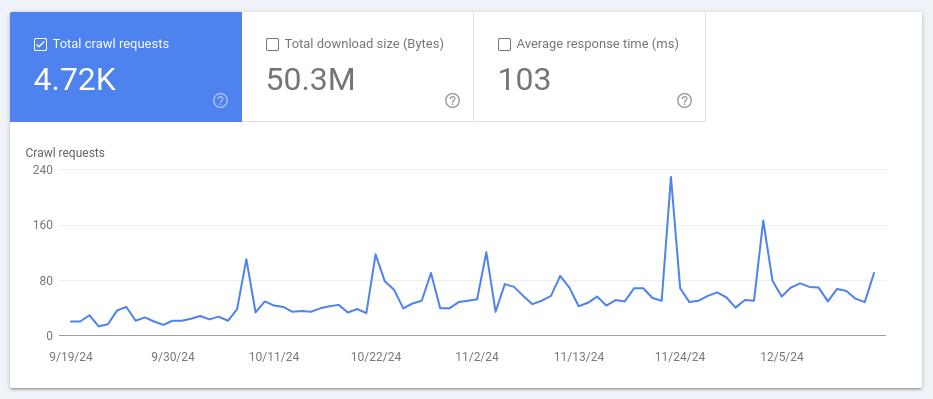

Establishing this cause/effect relationship in the data is the most important thing. Without that, you’re spinning your wheels. With Google Search Console, you’re not limited to just seeing what has already been picked up in the index. You can get a sense for how often Google revisits your site and how many pages it tries to pick up each time. It’s a bit oddly hidden under Settings / Crawl Stats / Open Report, but it’s there. And you can see the picture of the increased crawl budget as the page count increases, with these occasional spurts to see if it can pick up more, in my case doubling or tripling its number of requested pages, which is conspicuously close to the old number of pages on my site that I retired, indicating a checking of all those now 404’ing pages.

This indicates a split between two different crawling methodologies, one that actually follows links on your site, and another that uses Google’s historic view of your site, checking pages that today would be considered orphaned for lack of a crawl-path in.
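To make “orphaned” concrete, here is a rough Python sketch of the distinction, not a description of how Google actually crawls. It assumes you have a link graph of the current site and a list of URLs the engine has known historically; the sample URLs below are hypothetical.

```python
from collections import deque


def reachable_pages(links, start="/"):
    """Breadth-first walk of the link graph, starting from the homepage."""
    seen = {start}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return seen


def orphaned_pages(known_urls, links, start="/"):
    """URLs known from history that no longer have a crawl path in."""
    return set(known_urls) - reachable_pages(links, start)


# Hypothetical data: the current site links to two posts; one old URL lingers.
links = {
    "/": ["/futureproof/a/", "/futureproof/b/"],
    "/futureproof/a/": ["/futureproof/b/"],
}
known = ["/", "/futureproof/a/", "/futureproof/b/", "/old-post/"]
orphans = orphaned_pages(known, links)
print(orphans)
```

A link-following crawl only ever sees the reachable set, so requests for anything in the orphaned set have to be coming from the historic view.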

Also, it’s interesting to see what the site: search modifiers of Google and Bing (yes, it’s good to do this test under Bing as well) have to say.

- Google site:mikelev.in About 175 results

- Bing site:mikelev.in About 127 results

I have recently moved the blog into a /futureproof/ directory structure without any 301 redirects, and it’s interesting to watch both engines adjust to the new structure. Speaking of which, much of this is so that I can test the next generation of Pipulate against a site I personally control. I think I’m going to make this development work on Pipulate and the Next Gen SEO work a lot more visible here. It’s perfect fodder for the future-proofing tech book.

- Creating an entire site with just markdown files

- Leaning on GitHub Pages Jekyll static site generator system to get the site live without WordPress or any of the other usual CMS suspects

- Various querying and transform tricks you can do from the markdown, treating it like a database
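As a taste of that last point, here is a minimal Python sketch of querying markdown like a database. It assumes Jekyll-style posts with a front matter block between `---` lines, and it uses a deliberately naive `key: value` parser rather than a real YAML library. The function names and the sample posts (titles, dates, text) are invented for the illustration.

```python
def parse_front_matter(text):
    """Split a Jekyll-style markdown file into (metadata dict, body)."""
    lines = text.splitlines()
    if not lines or lines[0].strip() != "---":
        return {}, text
    meta = {}
    for i, line in enumerate(lines[1:], start=1):
        if line.strip() == "---":
            # Closing delimiter found: everything after it is the body.
            return meta, "\n".join(lines[i + 1:])
        key, sep, value = line.partition(":")
        if sep:
            meta[key.strip()] = value.strip().strip('"')
    return {}, text  # No closing delimiter, so treat it all as body.


def query_posts(posts, keyword):
    """Titles of posts whose body mentions the keyword (case-insensitive)."""
    results = []
    for text in posts:
        meta, body = parse_front_matter(text)
        if keyword.lower() in body.lower():
            results.append(meta.get("title", "(untitled)"))
    return results


# Hypothetical posts standing in for files read from the posts directory.
sample_posts = [
    '---\ntitle: "Rebooting Site"\ndate: 2024-12-01\n---\nNotes on GSC and crawl budget.',
    '---\ntitle: "Vim Habits"\ndate: 2024-11-20\n---\nMuscle memory in nvim.',
]
matches = query_posts(sample_posts, "crawl")
print(matches)
```

The same metadata-plus-body split is what makes the transforms possible: filter on the front matter, then feed the matching bodies to whatever does the distilling.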