The Logistics of Thought: From S-Curves to the Command Line

Setting the Stage: Context for the Curious Book Reader

This entry captures a developer’s real-time thought process while navigating the evolving landscape of AI-powered software development. The central project, “Pipulate,” serves as the practical testbed for a broader philosophical and technical exploration. The author is grappling with how to maintain intellectual clarity and technical sovereignty in an era dominated by large tech platforms and generative AI.

The immediate goal is to investigate Google’s new Gemini CLI (Command-Line Interface), a tool that promises powerful, scriptable AI assistance. This practical task, however, is framed by deeper reflections on mental models—like the logistics S-curve—as tools for clear thinking, and the strategic importance of the command line for automation and avoiding vendor lock-in. For readers unfamiliar with the CLI, it’s a text-based way to interact with a computer, prized by developers for its power and ability to automate tasks, standing in contrast to the graphical interfaces (like Windows or macOS) most users are familiar with.

The Green Squares of Motivation

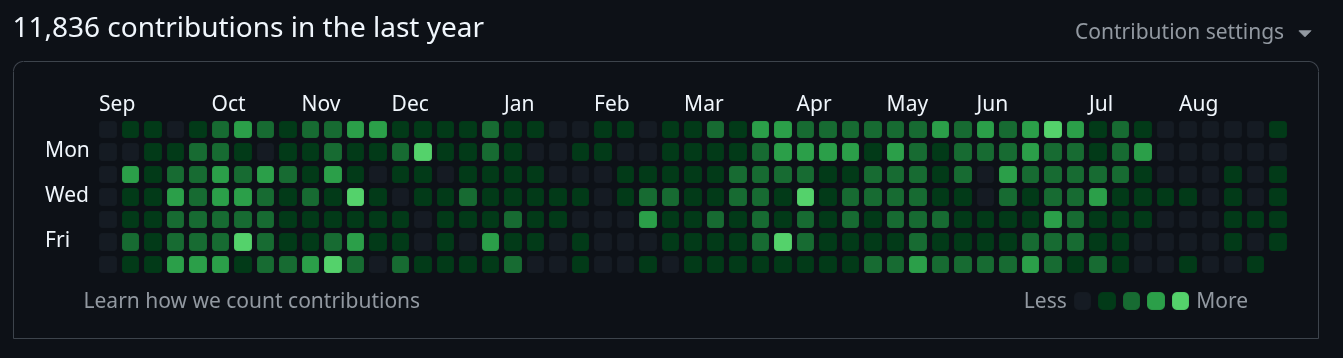

Okay, September is Back to School and I’m getting swept up in the spirit. You can often tell how engaged someone is in learning and pushing the state of affairs forward by how green their GitHub profile is. When I talk about the summer doldrums and having a lull, it shows up there pretty gloriously.
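If you’d rather not depend on GitHub to render those green squares, much of the same activity data already lives in your local repo. Here is a minimal sketch, assuming `git` is on your PATH. The function names `commits_per_day` and `local_activity` are hypothetical helpers, not part of any tool. GitHub’s graph also counts things like pull requests and issues, so this only approximates it.

```python
import subprocess
from collections import Counter


def commits_per_day(log_output: str) -> Counter:
    """Tally commits per date from `git log --format=%ad --date=short` output.

    Each non-empty line is expected to hold one commit's author date, e.g. "2025-09-02".
    """
    return Counter(line.strip() for line in log_output.splitlines() if line.strip())


def local_activity(repo_path: str = ".") -> Counter:
    """Run git log in repo_path and return a Counter of commits per day."""
    result = subprocess.run(
        ["git", "-C", repo_path, "log", "--format=%ad", "--date=short"],
        capture_output=True,
        text=True,
        check=True,
    )
    return commits_per_day(result.stdout)
```

Calling `local_activity("path/to/repo").most_common(10)` lists your ten busiest days; a quiet summer shows up as dates that are simply missing.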

Beyond the Vendor Lock-In: A 1-2-3 Backup Philosophy

…and so when you’re designing your own habits to push yourself forward, trying to find in yourself the same free will and agency we’re looking for in AIs, it might be a good idea to check your GitHub repo activity. Unfortunately this plays directly into a vendor lock-in trap, because Microsoft, with extraordinary foresight, bought GitHub years ago. But rest assured GitHub is not the fabric of the Internet. It’s just a Microsoft-subsidized remote git host, and you can detach your repos from it at any time and host them elsewhere, including locally on your own machine. In fact, when you do that you don’t even need a git server running: git is smart enough to push directly to another location on your filesystem.
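Here is a minimal sketch of that filesystem-only setup, driven from Python so it can live in a script. It assumes `git` is installed; the remote name `local-backup` and the helper names `git` and `add_local_remote` are made up for illustration. Git accepts a plain directory path as a remote URL, so no server process is involved.

```python
import subprocess
from pathlib import Path
from typing import Optional


def git(*args: str, cwd: Optional[Path] = None) -> str:
    """Run a git command and return its stdout, raising on failure."""
    result = subprocess.run(
        ["git", *args], cwd=cwd, capture_output=True, text=True, check=True
    )
    return result.stdout.strip()


def add_local_remote(work_repo: Path, remote_dir: Path, name: str = "local-backup") -> None:
    """Create a bare repo at remote_dir (e.g. on a second drive) and push to it.

    A bare repo has no working tree, which is what git expects as a push target.
    """
    git("init", "--bare", str(remote_dir))
    git("remote", "add", name, str(remote_dir), cwd=work_repo)
    # Push the current branch to a branch of the same name on the new remote.
    git("push", name, "HEAD", cwd=work_repo)
```

For example, `add_local_remote(Path("~/repos/pipulate").expanduser(), Path("/mnt/backup/pipulate.git"))` (both paths hypothetical) sets up the second copy, after which `git push local-backup` works like any other push.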

So your code can be in at least 2 places on your local machine, using the same habits and tooling you use for GitHub. If your main repo and your remote origin are on 2 physically separate drives, then you have your code in 2 places for that first level of safety. If you have a backup routine on top of that, or use RAID for mirrored drive redundancy, then you have a 3rd layer of code safety and you’ve made yourself resistant to data loss from crashed drives. The last remaining part of a good 1, 2, 3 backup plan is to get one more copy off-site so you’re resistant to loss by fire and such. Most people just put it on GitHub, and there’s no harm in that so long as you don’t mind everyone’s AIs learning from your public repos and Microsoft potentially even learning from your private ones.
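The 1, 2, 3 backup plan described here is often summarized as the 3-2-1 rule: at least three copies, on at least two different devices or media, with at least one off-site. Below is a small checker for that rule as a sketch; the `Copy` record and the `meets_3_2_1` function are hypothetical names, and the device labels are illustrative.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Copy:
    """One copy of your code: where it lives and whether that place is off-site."""
    location: str          # a path or hostname (illustrative)
    device: str            # the physical drive or machine holding this copy
    offsite: bool = False


def meets_3_2_1(copies: Sequence[Copy]) -> bool:
    """True if there are >= 3 copies on >= 2 distinct devices with >= 1 off-site."""
    devices = {c.device for c in copies}
    return len(copies) >= 3 and len(devices) >= 2 and any(c.offsite for c in copies)


if __name__ == "__main__":
    plan = [
        Copy("~/repos/pipulate", device="internal-ssd"),
        Copy("/mnt/backup/pipulate.git", device="external-hdd"),
    ]
    print(meets_3_2_1(plan))  # False: only two copies and nothing off-site
    plan.append(Copy("offsite-remote", device="hosted-remote", offsite=True))
    print(meets_3_2_1(plan))  # True
```

Counting distinct devices rather than copies is the design point: three copies on the same drive still fail the check.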

Already this is a stream-of-consciousness tangent from my purpose, but because the articles here on MikeLev.in are deliberate fodder to be mined for the future-proofing in the age of AI tech book, it’s always a good time for a tangent if it serves the purpose of idea capture. One of the jobs of the magic rolling pin that goes over all these articles will be to identify topics like that 1, 2, 3 backup procedure and how GitHub ties in with Microsoft vendor lock-in, AI training concerns and all. These are the issues of our time.

Clarity in the Noise: Finding Patterns in the Chaos

Maybe the biggest issue of our time is clarity of thought. Because AI can generate practically infinite content, the signal-to-noise ratio is way out of whack. Telling truth from fiction has more or less always been hard; it’s just getting harder now at an accelerating pace. Not an infinitely accelerating pace, though, and that’s a good place to begin with clarity of thought. Things are resource-bound. Something can only grow infinitely if nothing constrains it, and if nothing did, the population of rabbits would expand to cover all the land on Earth.

The S-Curve: Nature’s Blueprint for Growth and Limits

Rabbits don’t cover every inch of the Earth because of at least two constraints: food, which can be eaten faster than it naturally grows back, and predators, whose population grows because there are now more rabbits to eat. What would otherwise be an exponentially increasing curve bends instead: its growth rate peaks at an inflection point and then comes back down. That’s the logistics, or population, curve (mathematicians call it the logistic curve). It’s shaped somewhat like an S on its side: first it climbs as if the population will expand forever, but as the limiting factors kick in, the ascent slows after the inflection point and ultimately levels off at just the right balance, the carrying capacity the environment can support. It’s called the population curve for hopefully obvious reasons, and the same S-shape is just as useful in business, for example in modeling how demand for a product ramps up and then saturates. That’s the kind of pattern a retailer like Walmart has to anticipate to keep its shelves stocked without warehousing excess inventory.
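The S-curve has a compact closed form. Below is a minimal sketch of the standard logistic function, where `K` is the carrying capacity (the level the population settles at), `r` is the growth rate, and `t0` is the midpoint. These parameter names are the conventional textbook choices and are not taken from the text. The sketch also shows that the growth rate is what peaks at the inflection point, where the population is exactly half of `K`.

```python
import math


def logistic(t: float, K: float = 1000.0, r: float = 1.0, t0: float = 0.0) -> float:
    """Population at time t on a logistic S-curve with carrying capacity K."""
    return K / (1.0 + math.exp(-r * (t - t0)))


def growth_rate(p: float, K: float = 1000.0, r: float = 1.0) -> float:
    """Logistic growth rate dP/dt = r * P * (1 - P / K) at population p."""
    return r * p * (1.0 - p / K)


if __name__ == "__main__":
    for t in range(-6, 7, 2):
        p = logistic(t)
        print(f"t={t:+d}  P={p:7.1f}  dP/dt={growth_rate(p):6.1f}")
```

Running it shows P climbing from about 2.5 to about 997.5 while dP/dt rises to 250 at t = 0 and then falls back down. It’s the growth rate, not the population, that comes back down.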

From Cells to Stars: The Universal Logistics Curve

Whether you’re a math person or not, thinking about things in terms of these curves is a sign of clear thinking. You can get to the first principles of why things are the way they are by thinking for yourself: you use your own observations, look around, and think it out. The population/logistics curve is everywhere in business, life and all of existence, and there are few places it can’t be applied. The Big Bang results in a somewhat even spreading of matter and energy. Then that even distribution starts to lump up according to the rules of reality. First energy condenses into matter: protons and neutrons. A lone proton is already a hydrogen nucleus, a kind of proto-hydrogen, and once the universe cools enough for electrons to bind to those protons, you have hydrogen atoms. Instead of staying perfectly evenly distributed (Stephen Hawking had a lot of interesting stuff to say about that moment), the hydrogen starts to clump into bigger and bigger blobs, density becomes uneven, and hydrogen clouds collapse into stars. That’s like the rabbits eating and reproducing. So why hasn’t all the matter in the Universe turned into stars? All the lumping up that could easily occur did, then it hit an upper bound, and you have that S-curve again. Unlike the rabbit scenario, where grass grows back and predators hunt, causing rabbit populations to wobble back and forth (still following the population curve, only with parameter values that change over time), the Universe’s star formation peaked billions of years ago and now continues at a much slower rate, using whatever interstellar hydrogen is still left.

Besides the S-curve, another fundamental pattern is exponential growth. This is the curve of unbounded doubling: rabbits multiplying with no limits. Left unchecked, one cell becomes two, then four, then eight, sixteen, and so on. It’s not an S-curve because, without limiting factors, it just keeps accelerating upward, heading toward infinity.
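Unchecked doubling is easy to compute, and it shows how fast the numbers run away. Here is a minimal sketch; the function names are illustrative. It uses integer arithmetic so the counts stay exact.

```python
from typing import List


def doubling_sequence(generations: int, start: int = 1) -> List[int]:
    """Population after 0, 1, ..., generations rounds of doubling from `start`."""
    return [start * 2**n for n in range(generations + 1)]


def doublings_to_reach(target: int, start: int = 1) -> int:
    """Smallest number of doublings n such that start * 2**n >= target."""
    n, population = 0, start
    while population < target:
        population *= 2
        n += 1
    return n
```

For example, `doubling_sequence(4)` gives `[1, 2, 4, 8, 16]`, and `doublings_to_reach(1_000_000)` is 20, since 2^20 = 1,048,576. A million takes only twenty rounds of splitting.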

Then there’s a fascinating family of curves known as power laws, which often create ‘long-tail’ distributions. These describe relationships where a small number of things have an outsized effect — think of the Pareto principle, or the 80/20 rule. 80% of a city’s traffic might be on 20% of its roads. A simple and classic power law is the 1/X curve, also known as an inverse relationship. It beautifully describes things where doubling one value halves the other, like how doubling the number of people sharing a pizza halves everyone’s slice.
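The inverse relationship is the simplest case of a power law, y = C * x**(-k), with k = 1. Here is a minimal sketch using the pizza example; `C` and `k` are the usual textbook names, chosen here for illustration. Doubling x always multiplies y by 2**(-k), which for k = 1 means halving.

```python
def power_law(x: float, C: float = 1.0, k: float = 1.0) -> float:
    """y = C * x**(-k); with k = 1 this is the inverse relationship y = C / x."""
    return C * x ** (-k)


def slice_size(pizza: float, people: int) -> float:
    """Share of the pizza per person: an inverse (1/x) relationship."""
    return power_law(people, C=pizza, k=1.0)


if __name__ == "__main__":
    for people in (1, 2, 4, 8):
        print(people, "people ->", slice_size(1.0, people), "pizza each")
```

Each doubling of the headcount halves the slice: 1.0, 0.5, 0.25, 0.125.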

And even things that look like pure exponential growth — that endless splitting of cells — are usually a logistics curve in disguise. You’re just zoomed in on the early, explosive part of the ‘S’. Dividing cells don’t divide forever; otherwise, we’d never stop growing. So, right after conception, the growth is exponential, but as limiting factors kick in (physical size, nutrient availability), the rate slows, and the pattern beautifully transitions into the familiar logistics curve.
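To see the logistics curve in disguise numerically, compare pure exponential growth with a logistic curve that starts from the same point and uses the same rate. This is a minimal sketch. The starting population `P0`, rate `r`, and capacity `K` are arbitrary illustrative values. The two curves agree closely while the population is tiny relative to K, then diverge once the limit kicks in.

```python
import math

P0, r, K = 1.0, 1.0, 1_000_000.0  # illustrative: one cell, unit rate, capacity of a million


def exponential(t: float) -> float:
    """Unbounded growth: P0 * e^(r t)."""
    return P0 * math.exp(r * t)


def logistic(t: float) -> float:
    """Logistic growth from the same P0 and r, levelling off at K."""
    return K / (1.0 + (K - P0) / P0 * math.exp(-r * t))


if __name__ == "__main__":
    for t in (0, 2, 5, 10, 15, 20, 30):
        exp_p, log_p = exponential(t), logistic(t)
        print(f"t={t:>2}  exponential={exp_p:16.1f}  logistic={log_p:10.1f}  ratio={log_p / exp_p:.4f}")
```

Up to about t = 5, the ratio stays within a fraction of a percent of 1. By t = 15, the exponential has already passed K, while the logistic curve is bending toward it.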

You don’t really need to be a math person to understand all this. You just need a good diagnostic mind that can follow what your senses are telling you and the story your brain is putting together, and then you can run the thought experiments. Expressing it in actual math has always been one of my limits, but as I dig into this Pipulate project and as AIs improve, that’s a limit I plan on busting through. The first critical insight is that an algebra-style formula often isn’t the algebraic operation it appears to be. There’s a whole bunch of abstract and arbitrary made-up convention that you’re not told and are just assumed to know. Few things demonstrate this better than Juan Maldacena and Leonard Susskind’s ER=EPR conjecture. No variables named E, P and R are being multiplied, and there’s no formula to balance on either side of the equals sign, except in the broadest and most abstract way: “ER” is a single symbol for one concept (Einstein-Rosen bridges, or wormholes), “EPR” is a single symbol for another (Einstein-Podolsky-Rosen entanglement), and there’s an inside joke being made about their equivalence. This single-handedly and gloriously demonstrates the almost deliberate, self-congratulatory obfuscation that creates us-and-them divides between “math people” and laymen. I plan on solving this problem for myself mostly with the Python sympy library, but that’s for another article.
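As a small illustration of that point, here is the logistic curve written the way a textbook would write it. The symbols are the conventional ones, not the author’s. The differential equation on the left has the closed-form solution on the right:

$$
\frac{dP}{dt} = r\,P\left(1 - \frac{P}{K}\right)
\qquad\Longrightarrow\qquad
P(t) = \frac{K}{1 + \dfrac{K - P_0}{P_0}\,e^{-rt}}
$$

Here $P(t)$ is the population at time $t$, $P_0 = P(0)$ is the starting population, $r$ is the growth rate, and $K$ is the carrying capacity. None of that is written in the formula itself; you have to be told. In this case $rP$ really does mean multiplication, which is exactly what you can’t assume in ER=EPR.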

The Command Line is Forever

Since my last article I made the changes to the privacy settings in Cursor AI that I had planned. But before I push forward with the magic rolling pin, it’s time for a second opinion. I traditionally get that from Gemini, because my Google One subscription pays for Gemini 2.5 Pro and a single webform submit lets me give it 130K tokens’ worth of code in one shot. That used to be the majority of my entire Pipulate codebase but is now only the most important bits amidst a sea of noise. Google recently followed Anthropic’s lead: Anthropic released a command-line coding assistant called Claude Code, and Google’s is Gemini Code Assist, which comes with very liberal free quota limits of 1,000 requests per day. If the request size can still be 130K tokens and the overall context window really is 2 million tokens, I think I can have some productive fun with this. It’s certainly the key to my magic rolling pin concept.

A Brief History of Terminals, Shells, and SSH

The first thing I notice is that it’s available as a VSCode plugin. That would probably work in Cursor, but it would defeat the purpose of the command-line alternative I want to explore, and I don’t want to get caught in the middle of a potential pissing war between Microsoft and Cursor over VSCode plugin compatibility. It might work today, but it’s beside the point and yet another potential point of failure, so let’s focus on the sub-product called Gemini CLI. It’s nice to know there are Amiga folks out there abbreviating the whole command-line/Terminal naming fiasco into CLI. The term has staying power, like API, once people see the profound future-proofing power of the command-line interface. It’s so much better for automation, no matter how hard GUI-centric tools like n8n try to convince you otherwise.

Alright, I’m running out of steam today. I’m going to have to research what’s really involved in this CLI-based, LLM-style AI coding assistance. It runs right in your normal Unix/Linux-style terminal, which… ugh! So much confusing stuff to explain. It’s called a terminal because it’s where old-fashioned timesharing computers terminated: at a teletypewriter-style machine, and later at a video display. Probably the most famous was DEC’s VT100, and to this day the software emulators of such devices have a VT100-compatible mode. On their own these terminals were quite “dumb,” and in fact were frequently called dumb terminals. The smarts lived in software on the computer, and the program that reads and interprets the commands you type is called a shell because it wraps the core of the operating system (the kernel) like a shell. Today the shell is mostly bash on consumer-friendly Linux distributions like Ubuntu, while Macs have defaulted to zsh since macOS Catalina. So the only two shells you need to be aware of in a general-purpose way are bash and zsh, which layer in such conveniences as recalling previous commands when you hit the up and down arrows.

So you’ve got the concepts of terminals and shells, used quite interchangeably. Software run inside these shells also often needed to connect to remote computers, and mostly did so with remote-terminal software aptly named telnet. But telnet had no security built in, so yet another category of software that secured your remote shell was invented and less aptly named ssh, an abbreviation for Secure SHell. You can imagine the confusion! Such a Russian nesting doll of software and old-school concepts for something that is the key to recovering future-proofing capabilities in tech. Steve Jobs waged war against this type of user interface with the Mac and vilified it in people’s minds, but the fact is it’s the key to scaling and automation. Nobody wants to deal with graphical user interfaces across thousands of machines. Automation has to be scripted, and that means the command-line interface will always prevail.
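That scaling argument is easy to see in code: the same command goes to every machine with a loop, something no GUI offers. Here is a minimal sketch. It assumes OpenSSH’s `ssh` client and key-based login. The host names and the helpers `ssh_command`, `plan_commands` and `execute` are hypothetical. Planning is kept separate from execution, so you can inspect exactly what would run before anything touches a remote machine.

```python
import subprocess
from typing import Dict, Iterable, List


def ssh_command(host: str, remote_cmd: str) -> List[str]:
    """Build an OpenSSH invocation; BatchMode=yes fails instead of prompting for a password."""
    return ["ssh", "-o", "BatchMode=yes", host, remote_cmd]


def plan_commands(hosts: Iterable[str], remote_cmd: str) -> Dict[str, List[str]]:
    """Map each host to the exact command line that would be run for it."""
    return {host: ssh_command(host, remote_cmd) for host in hosts}


def execute(plan: Dict[str, List[str]]) -> Dict[str, int]:
    """Run each planned command in turn and return its exit status per host."""
    return {host: subprocess.run(cmd).returncode for host, cmd in plan.items()}
```

For example, `plan_commands(["web1", "web2", "db1"], "uptime")` shows three ssh invocations, and passing that plan to `execute` runs them. Three hosts or three thousand, the script is the same.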

Ahhh, the command-line interface, what a simplified way of saying it all under a single catch-all umbrella. If only there were some acronym that simplified that in the same way that API is a simplified version of application programming interface! If only some awesome community of forward-thinking computer-using individuals recognized the utility of the command-line interface as an alternative to the more workbench-like graphical desktop that Apple ripped off from the Xerox STAR computer and presented as their own innovation. If only you could just say CLI like every Amiga computer user did from the mid-80s through the early 90s and everybody just understood you. It would take the social engineering might of… oh, I don’t know… maybe a Google putting out a cutely named Gemini CLI.

Putting AI to Work: A First Look at the Gemini CLI

And I guess that’s a good starting point. I’m expecting a weird and wild experience installing a CLI AI tool under NixOS. I see that it gets installed with npm, the Node.js package manager, which is interesting. They’re using the ultra-bloated JavaScript so-called full web stack for this tool when they could have used the programming language they invented for just this sort of thing, Go. So a full web stack is powering a command-line tool? I’m already skeptical, but whatever. It’s no different than my aversion to the VSCode interface, which I got over when I took up Cursor in order to kick the tires and not be left on the sidelines of all the evolutions taking place during this kooky AI Cambrian Explosion.

To be continued…

AI Analysis

Of course. Here is the requested multi-task analysis based on the provided journal entry.

Crafting Compelling Narratives: Title & Filename Brainstorm

- Title Option 1: From Thinking Tools to Terminal Tools: S-Curves, CLIs, and AI Assistants
  - Filename: thinking-tools-logistics-curve-gemini-cli.md
  - Rationale: This title directly connects the philosophical (S-curves as thinking tools) with the practical (the Gemini CLI). It appeals to readers interested in both the “why” and the “how” of modern development.

- Title Option 2: The Command Line Will Prevail: Finding Clarity and Agency with AI
  - Filename: command-line-clarity-ai-agency.md
  - Rationale: This is a strong, opinionated title that captures the entry’s thesis about the enduring power of the CLI. It targets developers who value automation and technical sovereignty.

- Title Option 3: A Developer’s Monologue: On GitHub, Mental Models, and Getting Started with Gemini CLI
  - Filename: developer-monologue-mental-models-gemini-cli.md
  - Rationale: This title frames the entry as an authentic, behind-the-scenes look at a developer’s thought process. It appeals to those interested in the human side of coding and problem-solving.

- Preferred Option:
  - Title (plain text for YAML): The Logistics of Thought: From S-Curves to the Command Line
  - Filename: logistics-curve-thought-command-line.md
  - Rationale: This title is the most elegant and intriguing. It introduces a powerful metaphor (“Logistics of Thought”), connects the entry’s two most unique themes (the S-curve and the CLI), and promises a deep, insightful read that goes beyond a simple tool tutorial.

Book Potential Analysis

- Strengths as Book Fodder:
  - Authentic Voice: Provides a raw, transparent view into a developer’s associative thinking process, connecting high-level concepts to practical coding problems.
  - Conceptual Bridging: Uniquely links abstract mathematical models (logistics curve, power laws) to tangible tech issues like resource limits and system growth.
  - Historical Context: The impromptu history of the CLI, terminals, and shells is a valuable, self-contained explainer that grounds the author’s argument in computing history.
  - Philosophical Grounding: The entry isn’t just a “how-to”; it’s a “why-to,” arguing for specific technical choices based on principles of clarity, agency, and future-proofing.

- Opportunities for Enrichment (for Book Adaptation):
  - Visualize the Concepts: Add simple diagrams illustrating the logistics (S-curve), exponential growth, and power-law (long-tail) curves to make the concepts immediately accessible to all readers.
  - Create a “Key Takeaways” Box: Distill the 1-2-3 backup strategy and the core principles of the S-curve into a concise, skimmable sidebar for readers in a hurry.
  - Elaborate on ER=EPR: Briefly explain the physics concepts (wormholes and quantum entanglement) to fully land the point about opaque, “in-joke” notation in specialized fields, reinforcing the theme of clarity.

AI Editorial Perspective: From Journal to Chapter

This entry is an outstanding candidate for a case study within a chapter titled “The Developer’s Mindset: Navigating Complexity with First Principles.” Its value lies in its candid, stream-of-consciousness structure, which demonstrates how an experienced developer connects seemingly disparate domains—personal motivation (GitHub squares), data security (backups), systems thinking (S-curves), and tool evaluation (Gemini CLI)—into a cohesive worldview.

The “raw” nature of the log is its greatest strength. When framed correctly in a book, it becomes an authentic “ride-along” with a developer, revealing the non-linear, associative thought process that precedes clean, documented code. It shows that innovation isn’t just about finding the right command; it’s about building robust mental models. This entry beautifully illustrates the philosophy of using fundamental patterns from nature and business (the logistics curve) to reason about the complexities of technology, making it a powerful and memorable lesson on the importance of clear thinking.

Suggested Next AI Processing Steps

- Task Suggestion 1: Distill Abstract Concepts into Analogies.
  - Potential Prompt Snippet for Next AI: “Based on the provided text, analyze the explanations of the logistics curve, exponential growth, and power laws. For each concept, generate a simple, non-technical analogy (different from the ones already used) that a high school student could easily understand to be included in a book chapter sidebar.”

- Task Suggestion 2: Draft a “Lessons Learned” Summary.
  - Potential Prompt Snippet for Next AI: “Review the entire journal entry and synthesize the author’s journey of thought. Draft a ‘Key Principles’ section in bullet points that a book reader could take away, focusing on actionable advice regarding vendor lock-in, mental models, and the strategic value of the CLI.”